Modern Datawarehouse Dynamics365 Finance and Operations

Hello the Community !

Hope you are doing well and had great holidays. Let’s start new season with a global new article on something I like a lot as you have maybe noticed on previous articles on my blog for few months : Datawarehouse, Data Lake in all is glory, BI Reporting for your loved ERP system : Dynamics 365 Finance and Operations.

This is a new global post on technical end-to-end architecture. I will present a new capacity to push data coming from Tables and Entities into an Azure Data Lake Storage in real time as well as how to use it after with Azure Synapse Analytics and PowerBI. How to build a Modern Datawarehouse architecture for your ERP and expose custom views & model for your business users in PowerBI. How to use DirectQuery mode with SqlOnDemand mode (pay as query mode - serverless) + Azure Analysis Services. You will find at the end of the article my global DEMO in Youtube : Let’s jump in ; before take some coffee :)

For few projects that I’ve seen it’s always a key changer : propose an end-to-end global architecture for your ERP and other data sources in 1 Business Datawarehouse. As part of PowerBI and PowerPlatform you already know that Microsoft provide an easy Reporting tool (like PowerApps or PowerAutomate) to let the business users build their own reports and KPI. PowerBI is number 1 in the world say Gartner. Of course, it’s always the Business users that can provide the best their needs and what they want for a day-to-day analysis and PowerBI can really help them to achieve this goal. But there is always a “but” :)

PowerBI Desktop is not complex to learn how to build your report but I always seen this problem on a Dynamics 365 F&O project :

Can you explain the Model behind ? Oh god….. in this operation you will loose 75% of people, believe me ! Clearly a nightmare

Can you extend a little with other datasources ?

Is this really real time refresh ? Considering I need to be sure that if I need to provide some KPI to Auditor, the Board or Top Level Management I need to be sure of the results

What is the relationship if I need to cross data ?

So here we are, you are now stuck after providing a good training on PowerBI Desktop (very similar as Excel in PowerPivot / PowerQuery) - they can’t really build on their own some reports, since you will spend (meaning the IT Team) a lot of times for few months to be the PowerBI guide.

So, Why is Data Warehousing Important?

Data warehousing enhances the quality of business intelligence, and so managers and executives do no longer need to make business decisions based on limited data or their gut. With all kinds of data stored in one place, data warehouses allow organizations to quickly make informed decisions on key initiatives with little or no IT support. IT can thus experience improved productivity levels as they get to focus on governance role rather than the daily operational role. This allows enterprises to offer delightful customer experiences and become easier to do business with. Additionally, companies that are well-versed with data warehouse concepts are likely to generate more revenue.

As you also imagine, it will help the Business users to provide simple 1 global basic query based on some few data sources in 1 place :

No technical unknown field names

Relationship already done - crossing data already done for them

Provide one global query for each KPI / Reporting needs : Like Purchase, Sales, Inventory, Expenses, Financial, Margin, Turnover, Product , Cashflow etc…

Provide a way to give to them the most recent data sources as needed

1 Global model to be the same for every users

Users will do this way only the Visualization and filtering work on PowerBI !!

First of all before to go directly to this new type of architecture, I will very highly recommend to go through my most reading article about Azure Data Lake in all is glory, of course with also Dynamics 365 F&O (but not only) : In fact, i will again use this Azure component once more time in this architecture, with the CDM format : so I will not cover again this part and copy/paste everything =) You can jump in as like a prerequisite, like Microsoft like to do ; like a spider web Link everywhere in the Microsoft documentation ! 😗

For few customers, I already used (like in the article below) Entity Store - Aggregate Measurements for PowerBI with Dynamics 365 Finance and Operations DATA.

While it was good to see that Microsoft push this (aka AxDTWH) directly as CDM format in an Azure Data Lake Storage, it’s still quite complex about near real time reporting based. You will have to schedule like me a batch “Full Reset” or Even “Incremental Update” but it can take a lot of resources to push back transactional data into ADLS. And the main backward/lack was about the standard AggregateMeasurements. It’s often already aggregated data with some business measures and strange calculation for KPI - somehow you will have to customize it - extend it like me and propose all of other new cubes for Reporting purpose : so this is required some developments. I really wanted to push simple tables or entities data back into my ADLS without any new development on F&O side and do this kind of BI Modeling stuff in Azure itself before doing some reports in PowerBI !

Of course this is also a recommandation from Microsoft directly. AS you can see here in this article, don’t use OData API call from PowerBI but use Entity Store instead (like me : good to know !) - Indeed I’ve seen other typical projects with some PowerBI Reports with a Full global call 8 times (PowerBI Pro Limitation) per day to retrieve all data back as importing data mode from D365 F&O to PowerBI ! Oh gosh, imagine you call the Data Entity from OData with 3 millions of lines in LedgerJournalTrans, it’s not incremental : yes you will have some performance issue ! :)

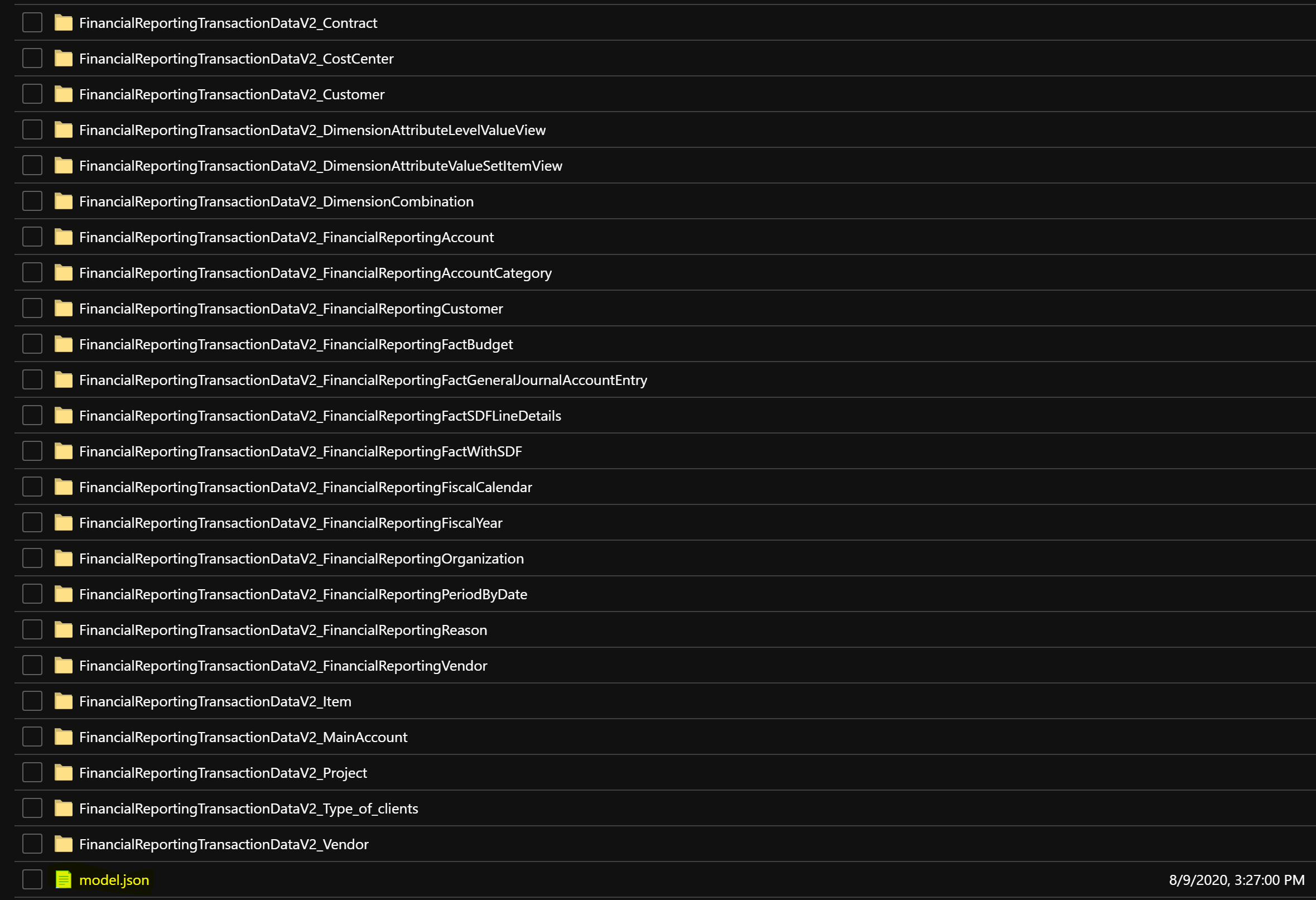

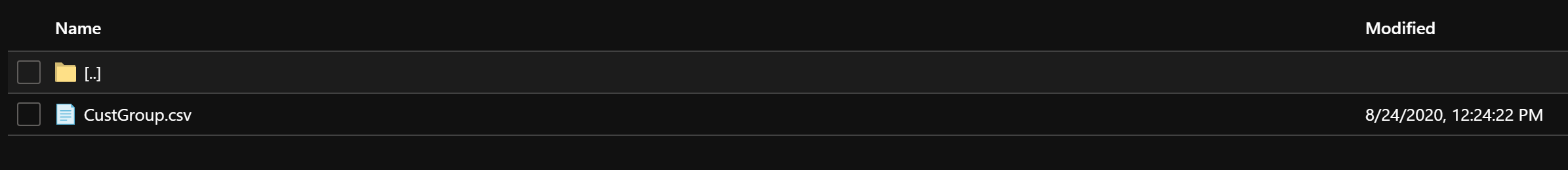

As a reminder, here is now my Azure Data Lake with a Sandbox instance Dynamics 365 F&O

As you can see, I still have my AggregateMeasurements - EntityStore inside my own ADLS as a main folder right now. As a good teasing, you can already see some new stuff coming up : like Tables and Entities ! You will have to read the whole article to understand :)

Inside my Entity Store, I have of course all way all views as folders ; inside a CSV file generated and a model.json for CDM format purpose and metadata properties (including column names & data type)

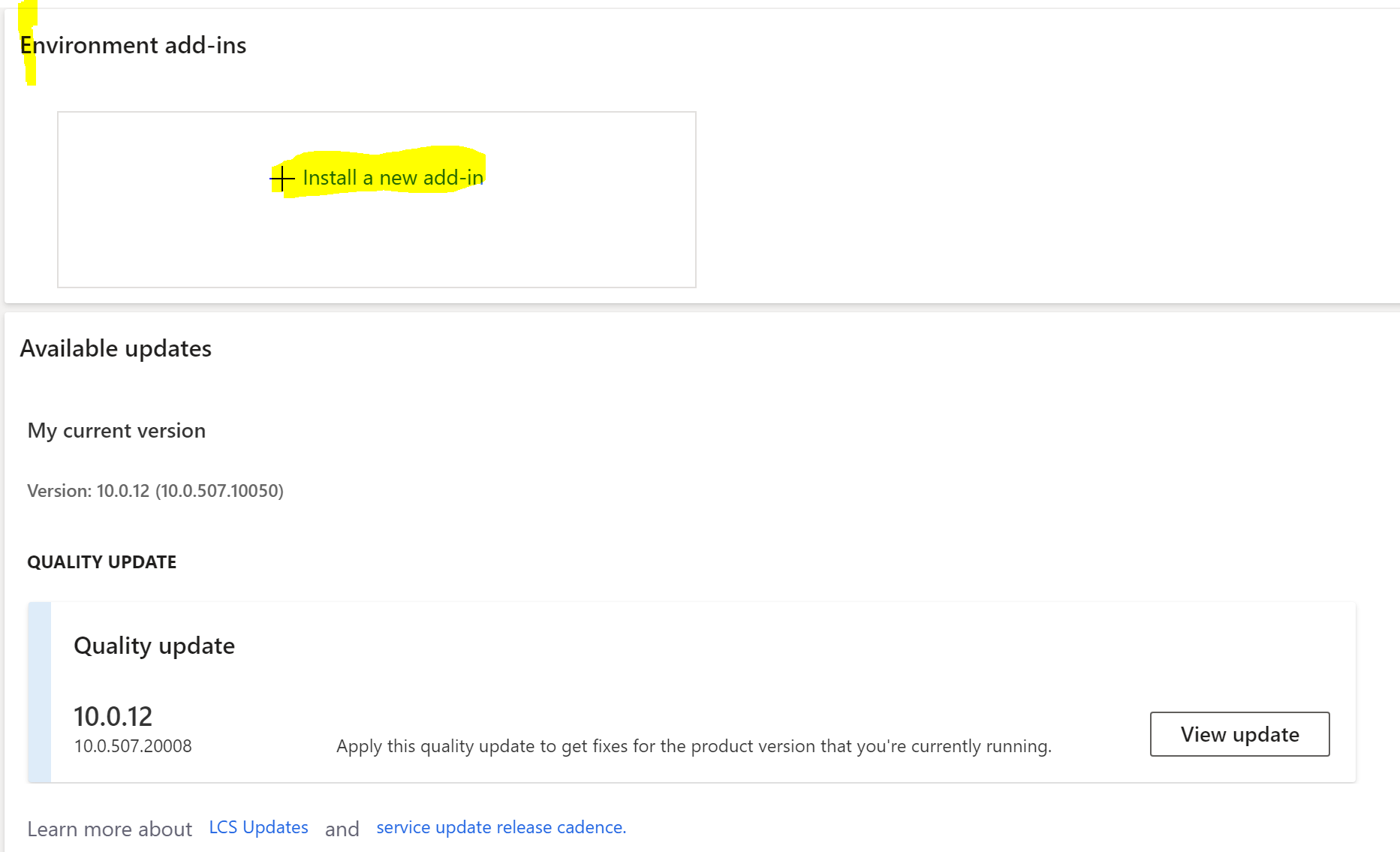

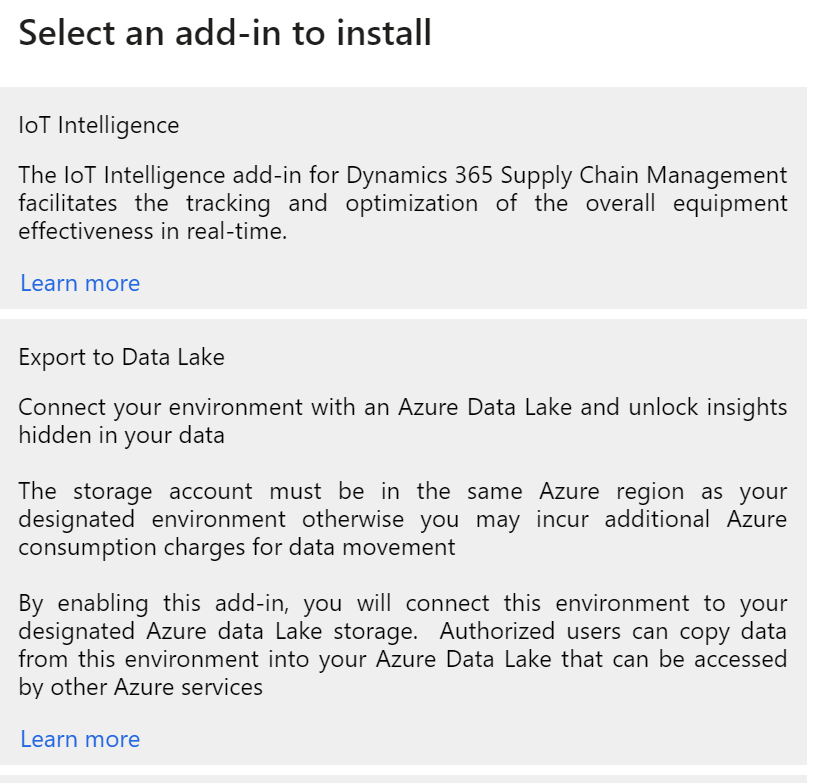

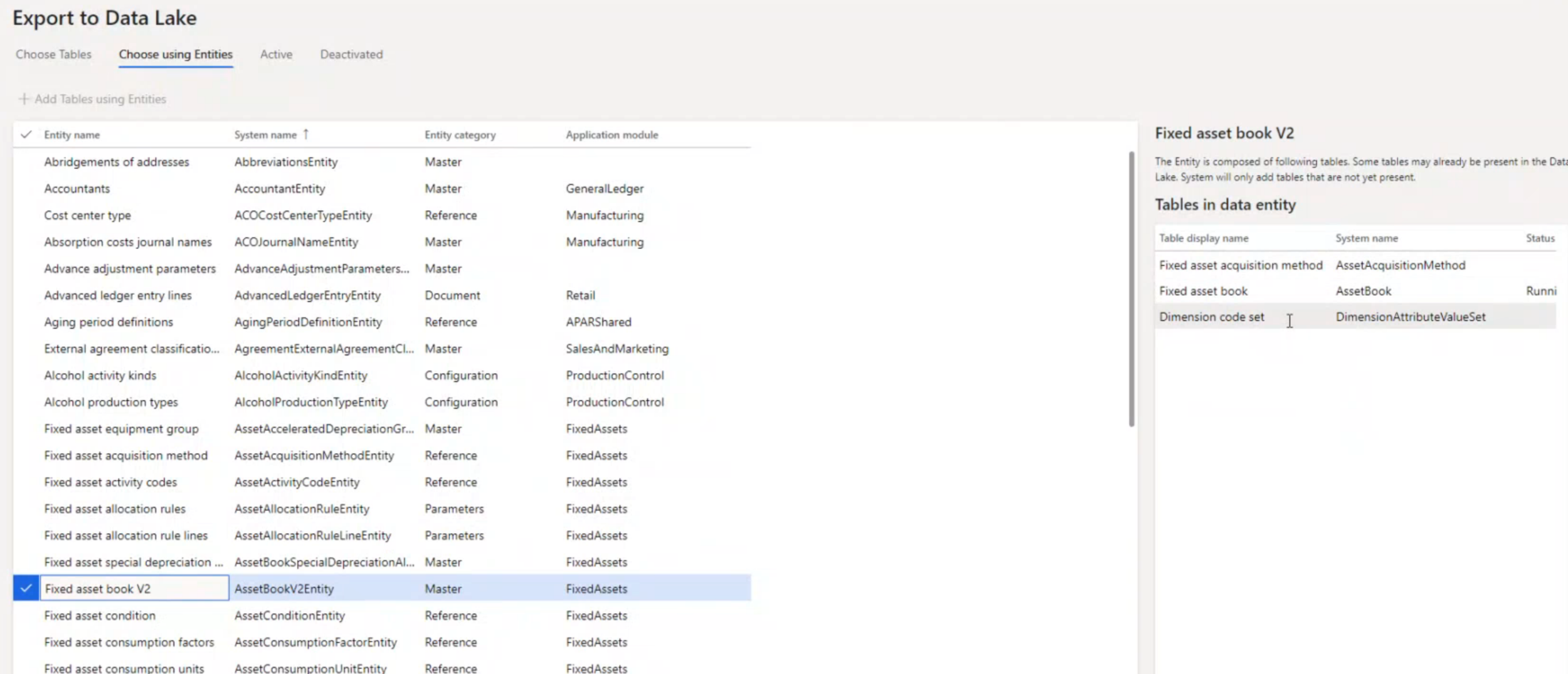

Also as a reminder, you can already add & setup the ADLS setup directly in LCS as an Addin, like this :

Already explain this part / setup also in my previous article about Dynamics 365 AI Apps for F&O : Finance Insights !

As you will see in this article, before calculate and generate some insights with A.I Builder of the PowerPlatform, you will have to push & activate few AggregateMeasurements in the Data Lake : so another reason so sync everything in an ADLS and not only for PowerBI but also for A.I and Azure ML model.

So now let’s talk about Tables and Entities ; after the teasing before, you have seen 2 new folders into my ADLS. But where this coming from ?!

Well I will have to first present you something that it’s not really (yet) available but will be soon released. 2 new features already in the Microsoft documentation. It was normally plan for 10.0.13 + PU37 (already in Preview stage) version but Microsoft is still somehow in development on his side.

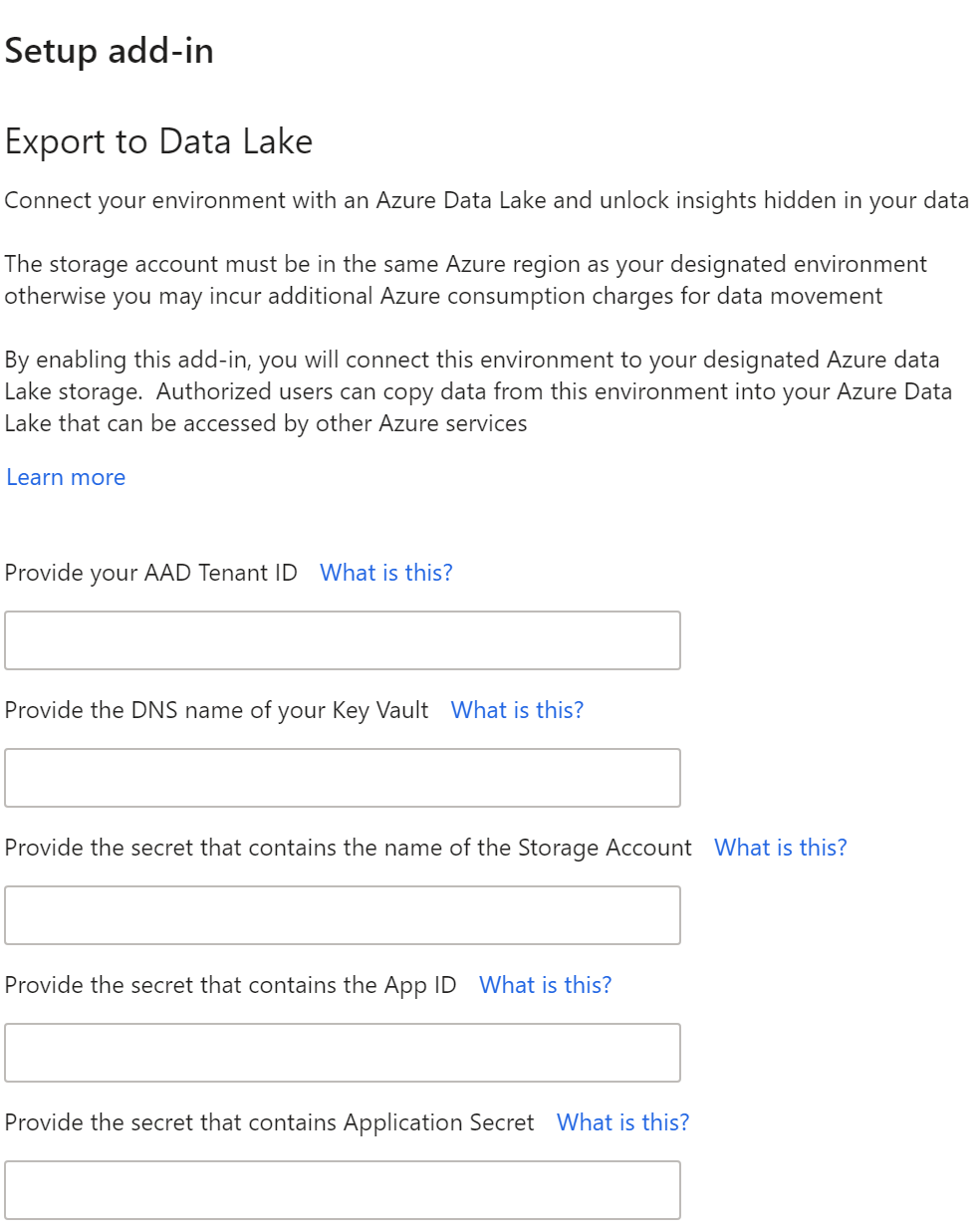

After deploying the Preview of 10.0.13 on my side I had this error message

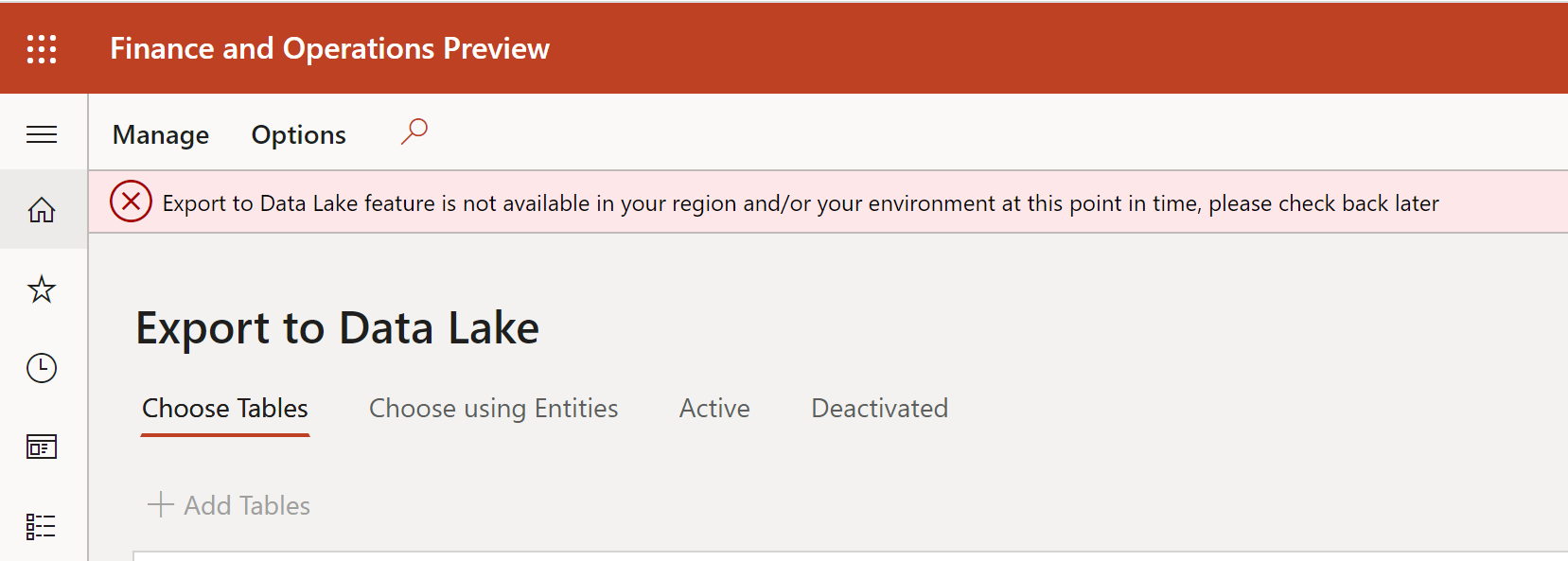

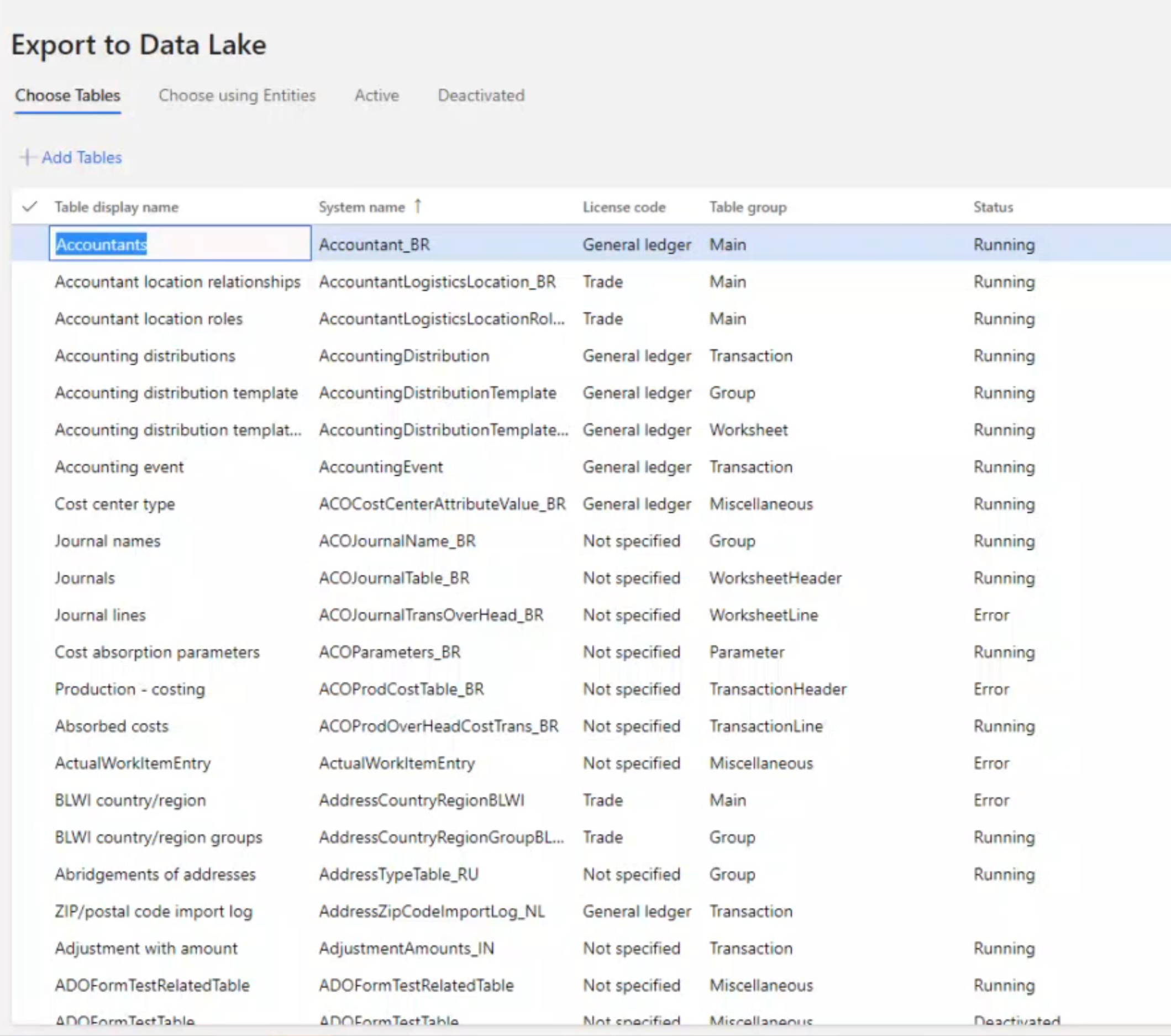

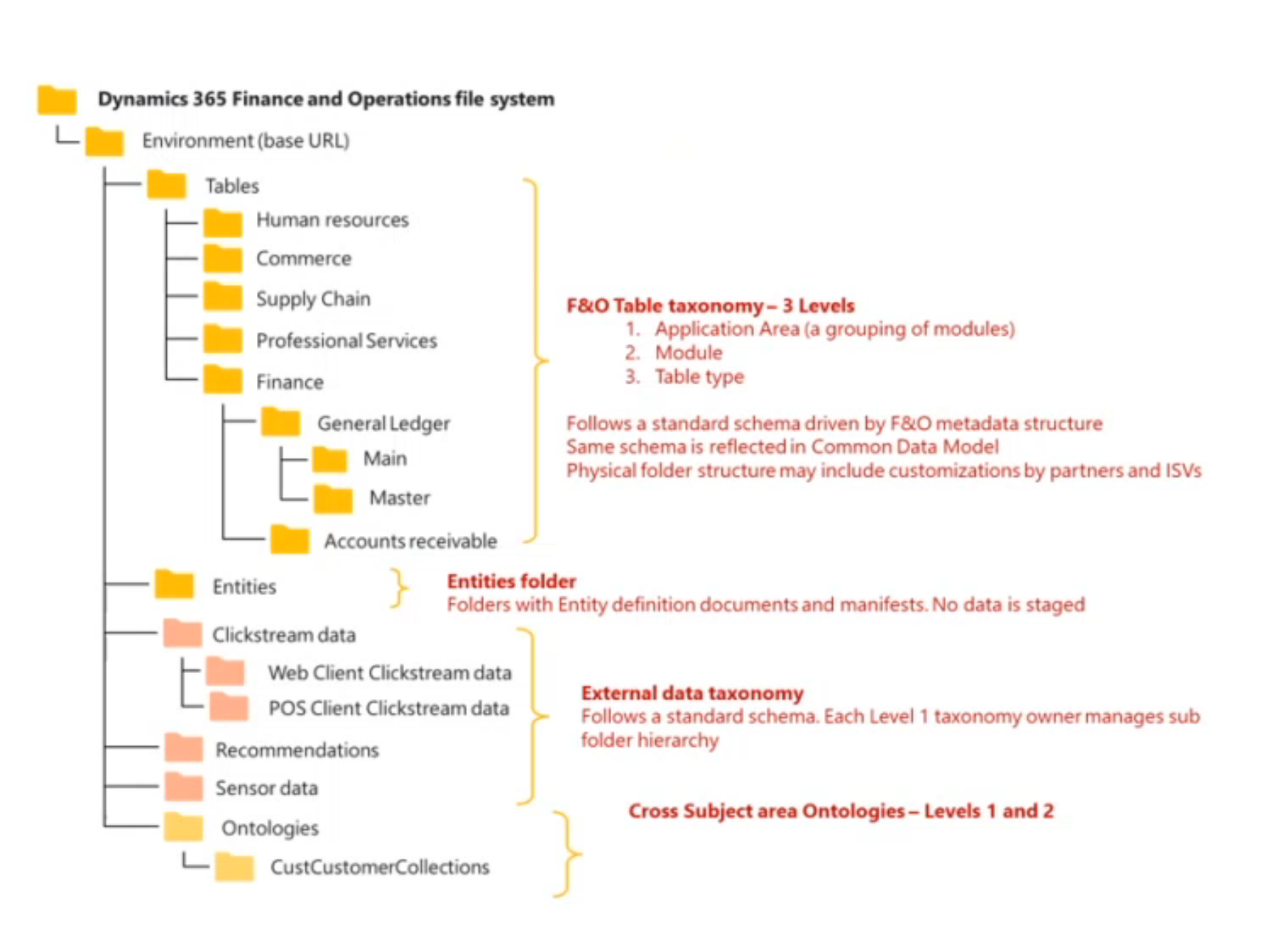

As you will see after Microsoft provide some direct configuration in order to push your tables and entities into your ADLS ; like this :

The structure into your ADLS will be like this at the end :

Source : Microsoft FTE

The sync will be near real time, yes you read it right ! After updating records and create new one, it will be sync in real time into ADLS (like PowerPlatform already does with the Common data service into ADLS in fact)

Just for ERP system and since the amount transactions can be large - I really want to test it ASAP to do a large performance testing. I will do that and let you know.

At the end here will be your main global of Datawarehouse that I will cover just after - so it will help you to understand each components :

Here is like usual with me an unsexy schema :

I will cover more in details the part of Azure Synapse and PowerBI at the end of article.

Good news for you, you can meanwhile use a Github project right now by Microsoft FastTrack team in order to test it (without the feature explain before) - see just after this presentation.

Currently, the global architecture schema for a Modern Datawarehouse can be represented like this :

Source : Microsoft Documentation

Here the Business Apps will be of course your Dynamics 365 F&O instance. The step 1 will be replaced after by the previous feature ; right now for a Proof of Concept it will be with Azure Data Factory (ADF)

Data Flow

Combine all your structured, unstructured and semi-structured data (logs, files, and media) using Azure Data Factory to Azure Blob Storage.

Leverage data in Azure Blob Storage to perform scalable analytics with Azure Databricks and achieve cleansed and transformed data.

Cleansed and transformed data can be moved to Azure Synapse Analytics to combine with existing structured data, creating one hub for all your data. Leverage native connectors between Azure Databricks and Azure Synapse Analytics to access and move data at scale.

Build operational reports and analytical dashboards on top of Azure Data Warehouse to derive insights from the data, and use Azure Analysis Services to serve thousands of end users.

Run ad hoc queries directly on data within Azure Databricks.

Components

Azure Synapse Analytics is the fast, flexible and trusted cloud data warehouse that lets you scale, compute and store elastically and independently, with a massively parallel processing architecture.

Azure Data Factory is a hybrid data integration service that allows you to create, schedule and orchestrate your ETL/ELT workflows.

Azure Blob storage is a Massively scalable object storage for any type of unstructured data-images, videos, audio, documents, and more-easily and cost-effectively.

Azure Databricks is a fast, easy, and collaborative Apache Spark-based analytics platform.

Azure Analysis Services is an enterprise grade analytics as a service that lets you govern, deploy, test, and deliver your BI solution with confidence.

Power BI is a suite of business analytics tools that deliver insights throughout your organization. Connect to hundreds of data sources, simplify data prep, and drive ad hoc analysis. Produce beautiful reports, then publish them for your organization to consume on the web and across mobile devices.

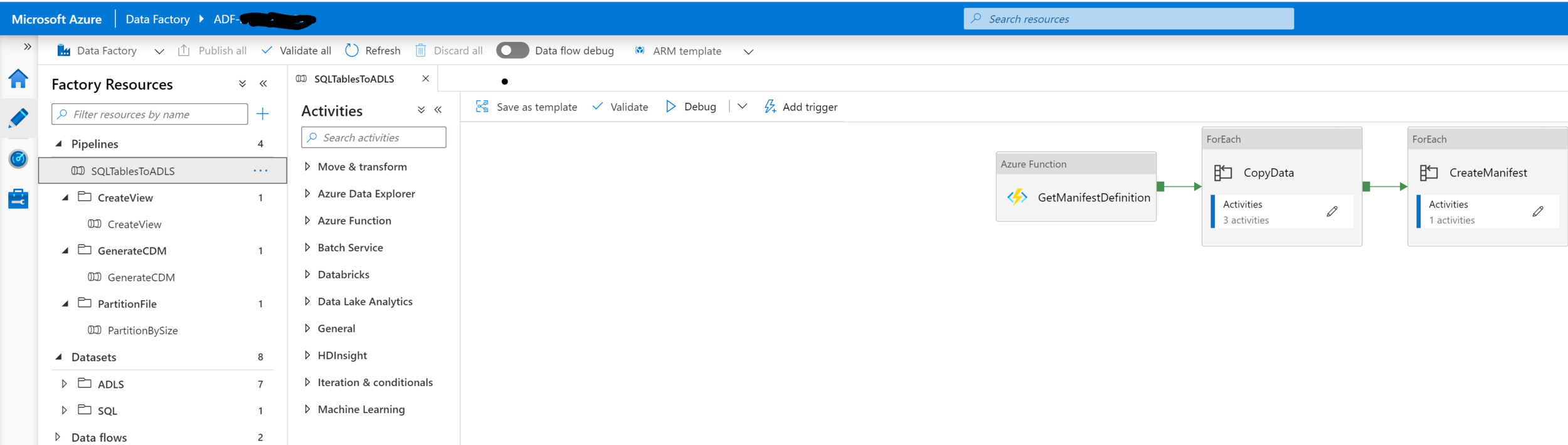

Project SQLFullExportToADLS has been released with a V2 right now in Github ; of course you can download it in order to test right away :

I will not cover every step of configuration, since Microsoft has provided already a good How-To setup in Github directly ; as well as a ReadmeV2 file. It just took me 2 hours to initialize & configure it.

Reminder ; you will need :

Azure Data Factory

An Azure Data Lake of course : you can use like me the same ADLS as used for Entity/AggregateMeasurements if you have already one.

Azure Synapse Analytics

Azure Function

An instance of Dynamics 365 F&O / Like me you can still access on a TIER2 instance (not Self-Deployment type) you will be able to copy your production instance and also install an Integration Runtime in order that Azure Data Factory pipeline can reach your SQL Azure instance

Or You can use also a TIER1 development instance - as well as a Cloud Hosted Environment : doesn’t matter - but yes of course an environment where you can install and have rights to install an Azure IR

Be aware that even ADLS, or ADF, or Synapse have really a cheap cost in DEV/TEST side. You will not be charged a lot during a PoC like me. I mean ADLS is very low on storage side, ADF cost mostly 5$ for my larger test and Synapse cost 5$ per TB of files on query SqlOnDemand so yes clearly you can go ahead !

After deployment on Azure ADF, you will have few pipelines like me :

You have the main one : SQLTableToADLS , this one will in fact retrieve all DATA from the parameter you have passed (TableNames + ADLS Link of your storage) - ForEach table it will query your Database of D365 F&O and push them as CSV files to your ADLS. The CreateManifest will after call an another pipeline that will generate all the .JSON format in CDM format. The last one CreateView will be again an another Azure Function that called your ADLS and see .json format on every subfolders generated and create automatically for you Views on Azure Synapse.

Very soon Azure Synapse will be able to connect directly also on Azure Data Lake in CDM format in order to generate without code the view. We will see how we can after extend this view on Azure Synapse and build your own Modern Datawarehouse.

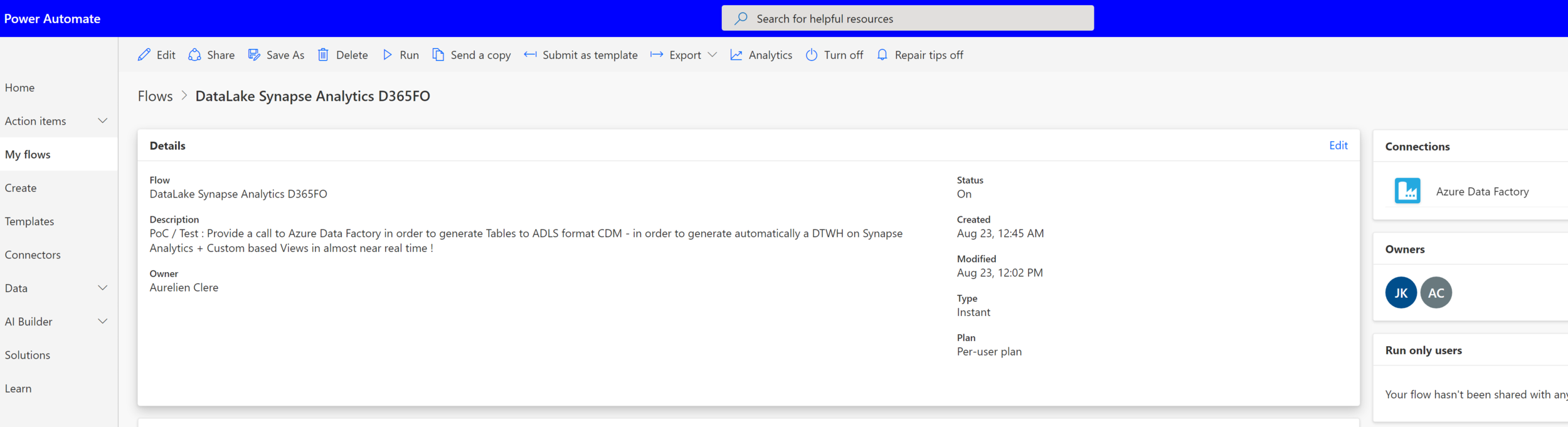

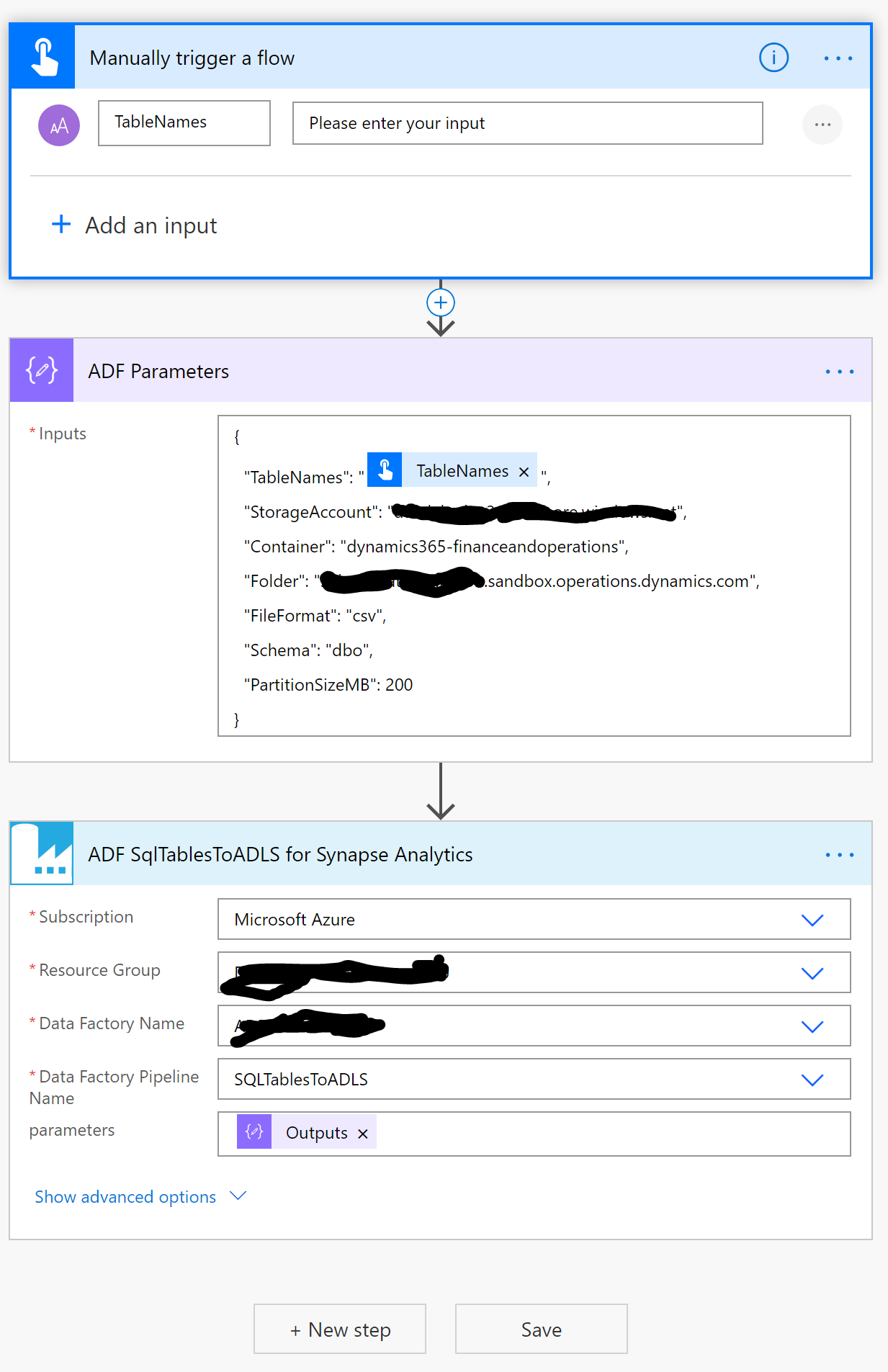

Since this is PoC you can also schedule the ADF pipeline but mostly it will be an OnDemand call. You can even call it with PowerAutomate on your smartphone like me :

(Again a good example to see that we can call everything with PowerAutomate =))

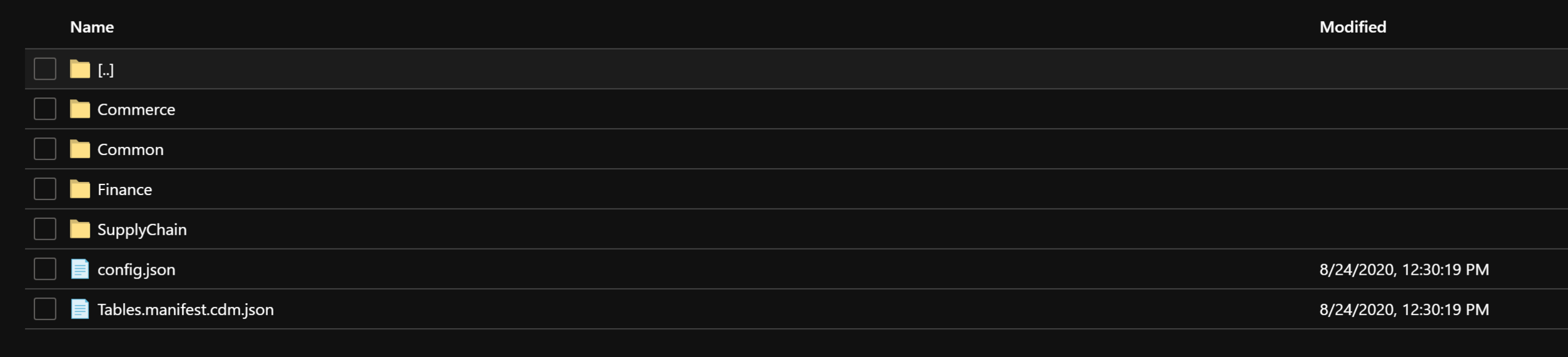

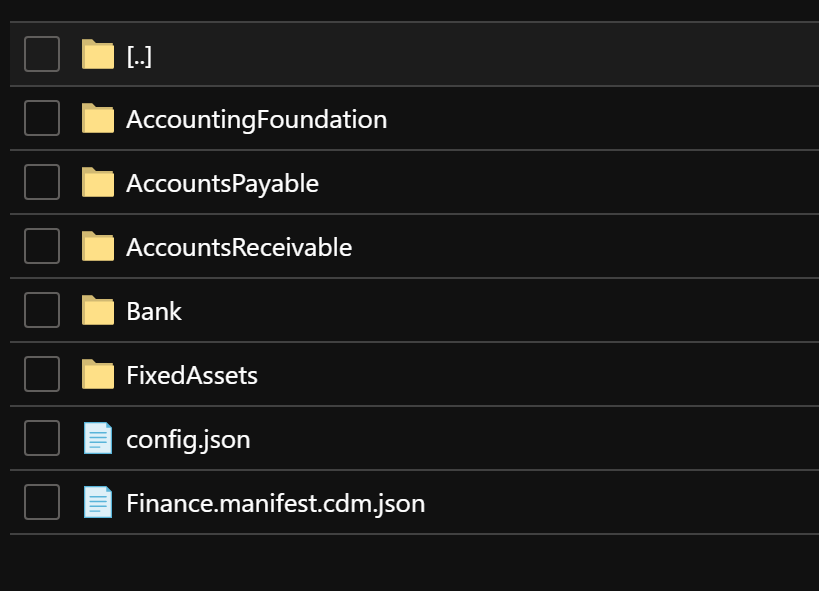

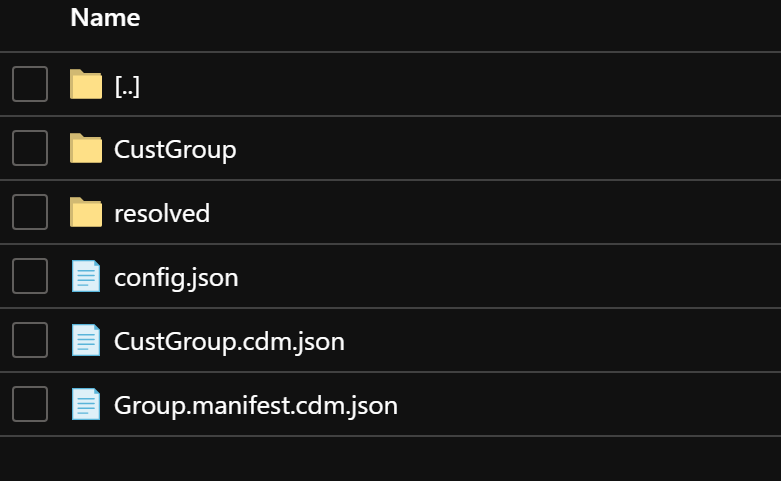

It will generate this “Tree View” folders architectures into your ADLS (it will be the same structure with the global features coming few months as described before)

As you can see we have all CDM.JSON generated as well as the CSV file with the DATA inside of the table.

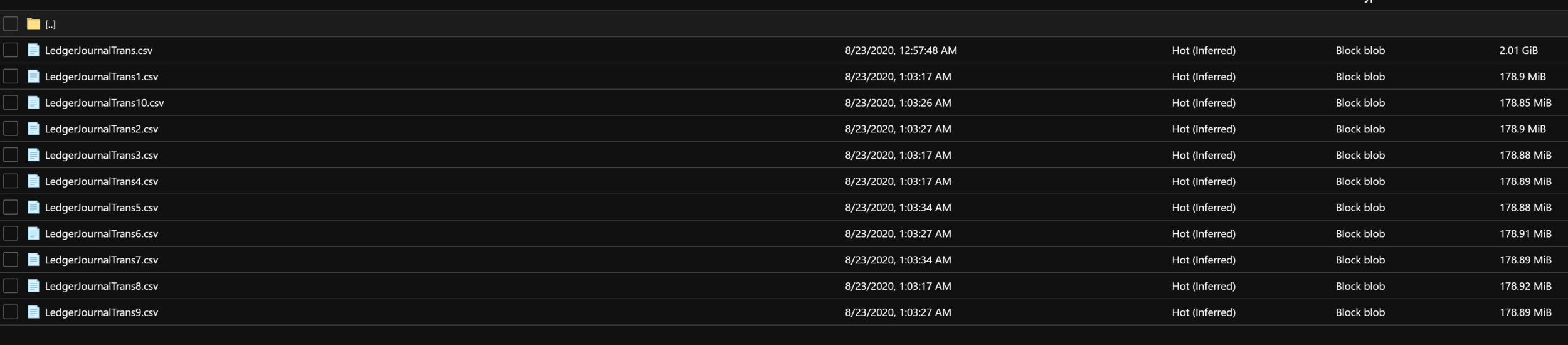

With 200MB file splitted in multiple files but Synapse will be able to combine in 1 global view of course ; like me for a TEST with LedgerJournalTrans :

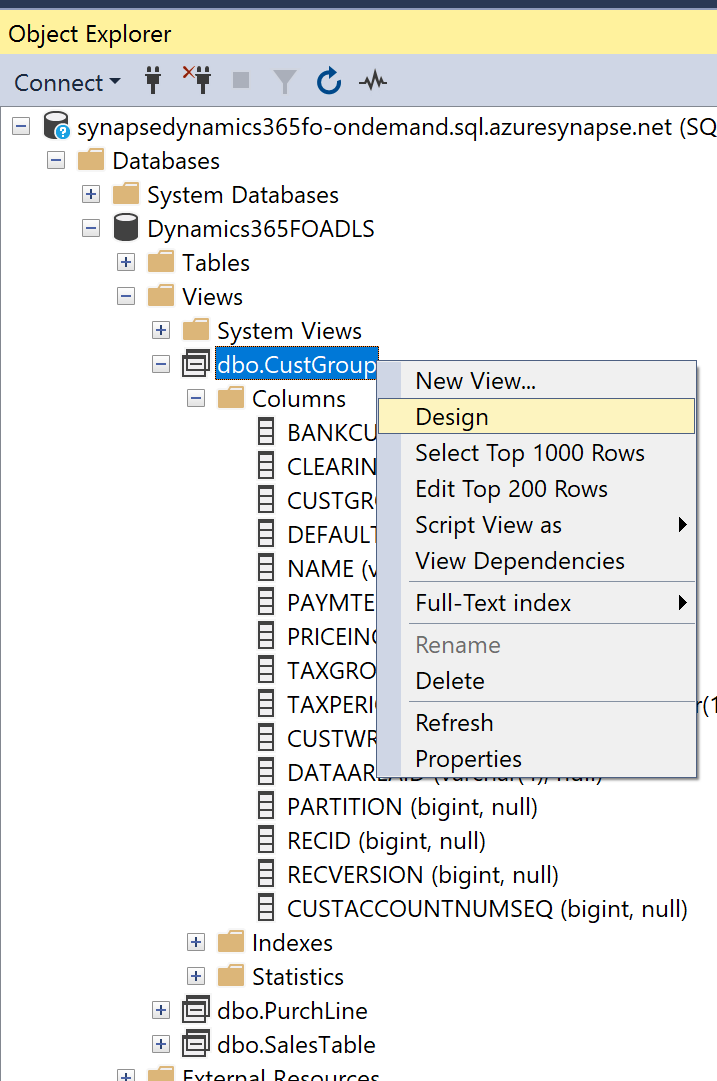

After this part has been generated into my ADLS, of course I have now also some views created on my Synapse Analytics Workspace where I have created an SQLOnDemand Datawarehouse. It’s an external datasource from ADLS and just a reference, no sync needed. Where you will query your database of Synapse (aka SQL DW before) you will call & retrieve your DataLake - somehow like a Virtual Entity in CDS. So no replication of DATA.

You will see it directly to your Synapse Analytics workspace. The portal is clearly the same as Azure Data Factory : good point :)

As you can see just below, I have now in real time a reference and the same structure & converted data type to my CSV file into my ADLS

So now we have seen a little Synapse let’s go further !

Azure Synapse Analytics is an unlimited information analysis service aimed at large companies that was presented as the evolution of Azure SQL Data Warehouse (SQL DW), bringing together business data storage and macro or Big Data analysis.

Source : Microsoft Documentation

There is no infrastructure to setup or clusters to maintain. It uses a pay-per-query model (you are only being charged per query for the data processed by the query – see pricing) and auto-scales depending on the amount of data being queried ! Like you have seen, Synapse is really the major part of this Modern Datawarehouse component in my architecture - because ADLS was the part of storage of DATA as CDM format ; but the BI Reporting part will be done in this Azure Component. It will be the main endpoint to connect for PowerBI as you will see.

Synapse Analytics give you as a single service for all workloads when processing, managing and serving data for immediate BI and data prediction. It’s well integrated with Power BI and Azure Machine Learning, due to Synapse's ability to integrate mathematical machine learning models using the ONNX format.

You can handle huge query amounts of information either on demand serverless for data exploration and ad hoc analysis, or with provisioned resources, at scale. So you will have the choice :) On a PoC analysis of course I pick the first one (SqlOnDemand Query)

As you can see below it will be your main DATA ecosystem and at center of your business architecture.

Source : A friend from FastTrack team

Like below you will use T-SQL Query with an OPENROWSET to your external datasource ; if you want to see in action ; go ahead on the Microsoft Documentation

Since a former MVP on DataPlatform and now a Microsoft FTE as provided a very very good article on Synapse I will also suggest to go read it !

Of course here we talk a lot of Dynamics 365 F&O since I’m working on it, but as you can imagine, we could easily add other DATA sources as external - like pushing & Ingest data coming from an Oracle Database with Azure Data factory (even onPremise) and after use it to cross data on Synapse. Of course it’s also a good way for Data scientist to analyze data before jumping maybe to Databricks to prepare notebook with Python for Azure Machine Learning based on this global Datawarehouse.

You can extend your model and the view generated of course and access it with SQL Server Management Studio like me :

Before going down to my last part about how-to use it after directly in PowerBI DirectQuery mode in Synapse, I also like these 2 videos. 1 is for a good overview of Synapse Analytics and also 1 is a focus on Analysis Services. If you go back on my article on the global Modern Datawarehouse architecture you have maybe see a SSAS component that can be in intermediate between Synapse and PowerBI.

The classic Microsoft on-prem architecture was SQL Server (DWH) or here AxDTWH for Dynamics + SQL Server Analysis Services. + Power BI. You could have copy-paste this architecture to Azure using Dynamics365 F&O / Azure Data Lake Storage / Azure SQL DWH aka Azure Synapse Analytics + Azure Analysis Services + Power BI. Also, the Power BI product group direction is to include the AAS capabilities as part of Power BI

Now let’s go to the last part : PowerBI ! Here we are ! :) Hope you have still enough coffee to read !

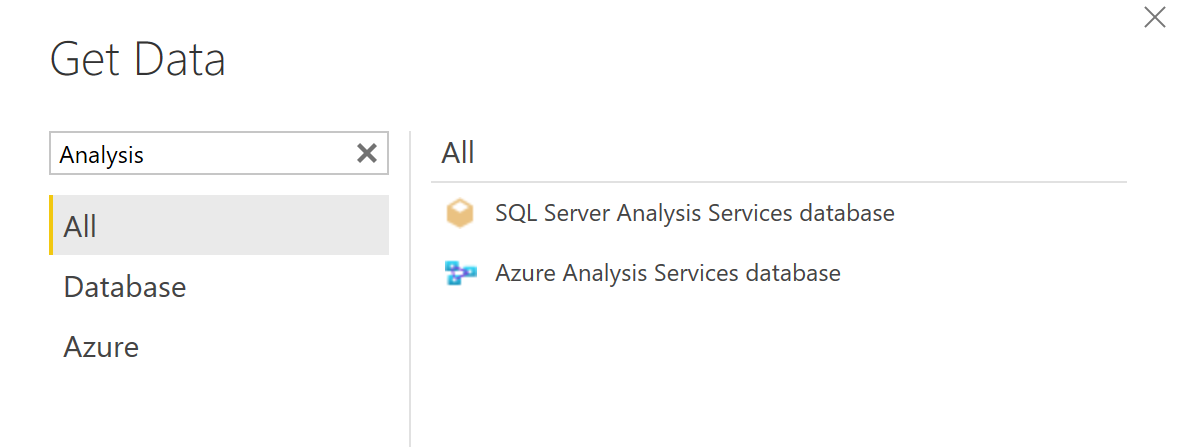

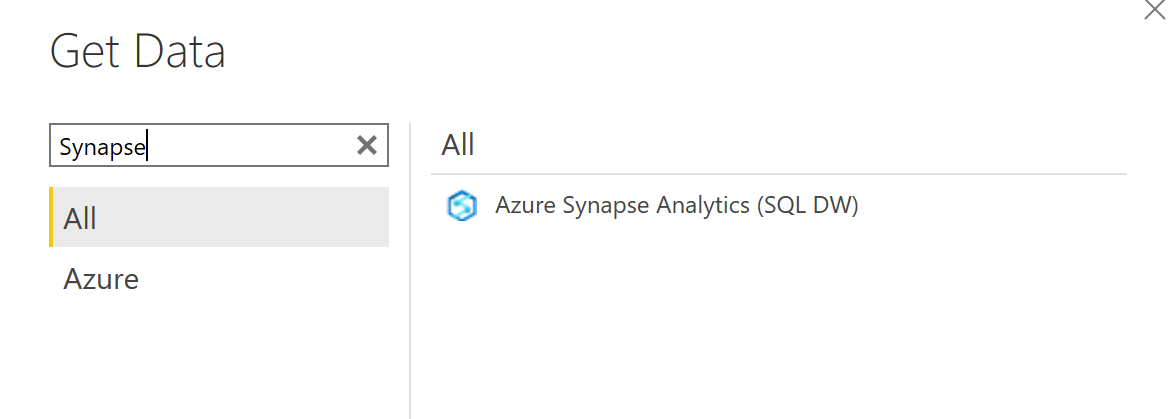

First step is to select AAS or Synapse : it depends if you have also added Analysis Services on top of Synapse or not.

On my side, I will connect directly to Synapse since it’s just a PoC and for some customers I think Synapse will be really enough to build a global Datawarehouse (at first)

Since we need to focus on real-time reporting, I will do a DirectQuery mode - and not import data mode. My DAX Queries in PowerBI will be converted to T-SQL statement in Synapse and I will be sure to see exactly as realtime the latest data coming from my ERP : Dynamics 365 F&O.

Remember, Dynamics365 F&O will push in realtime data into ADLS and my Synpase is just an external datasource (OpenRowSet) to ADLS, and in which I made some extension and building queries + views to propose an easy & comprehensive model for my users in PowerBI.

In DirectQuery mode, the main goal will be to focus on Display KPI and Insights ! Like with some aggregation and business measures. I don’t want to create a tablerow on PowerBI based on my Synapse. If I need an extraction like this, I can go directly with other components (like before with Azure Data Factory — ETL component to store the data to a sharepoint - and PowerAutomate can generate and ask this ADF pipeline and send an email for business users)

But also be aware, DirectQuery has some limitation - so read it before going to build your report.

With SQLOnDemand Serverless Synapse, I had an issue to go also on Import Data Mode - assuming you will have to be on a provision dedicated mode in Synapse if you really want to go in import data mode. But, you will have still to schedule 8 refresh max per day with this kind of mode (PowerBI PRO licence and not the Premium one) => so users will not be able to see in fact the main asked / most wanted feature that I’ve seen for Dynamics 365 projects : « I want to be sure when I see my data in PowerBI that this is the most recent updated one »

So if you have put all this kind of effort to build a global end-to-end modern datawarehouse architecture where you are mostly in near real-time everywhere all the way, but in the last step you are not, that’s kind of disappointing, right ? That’s my opinion :)

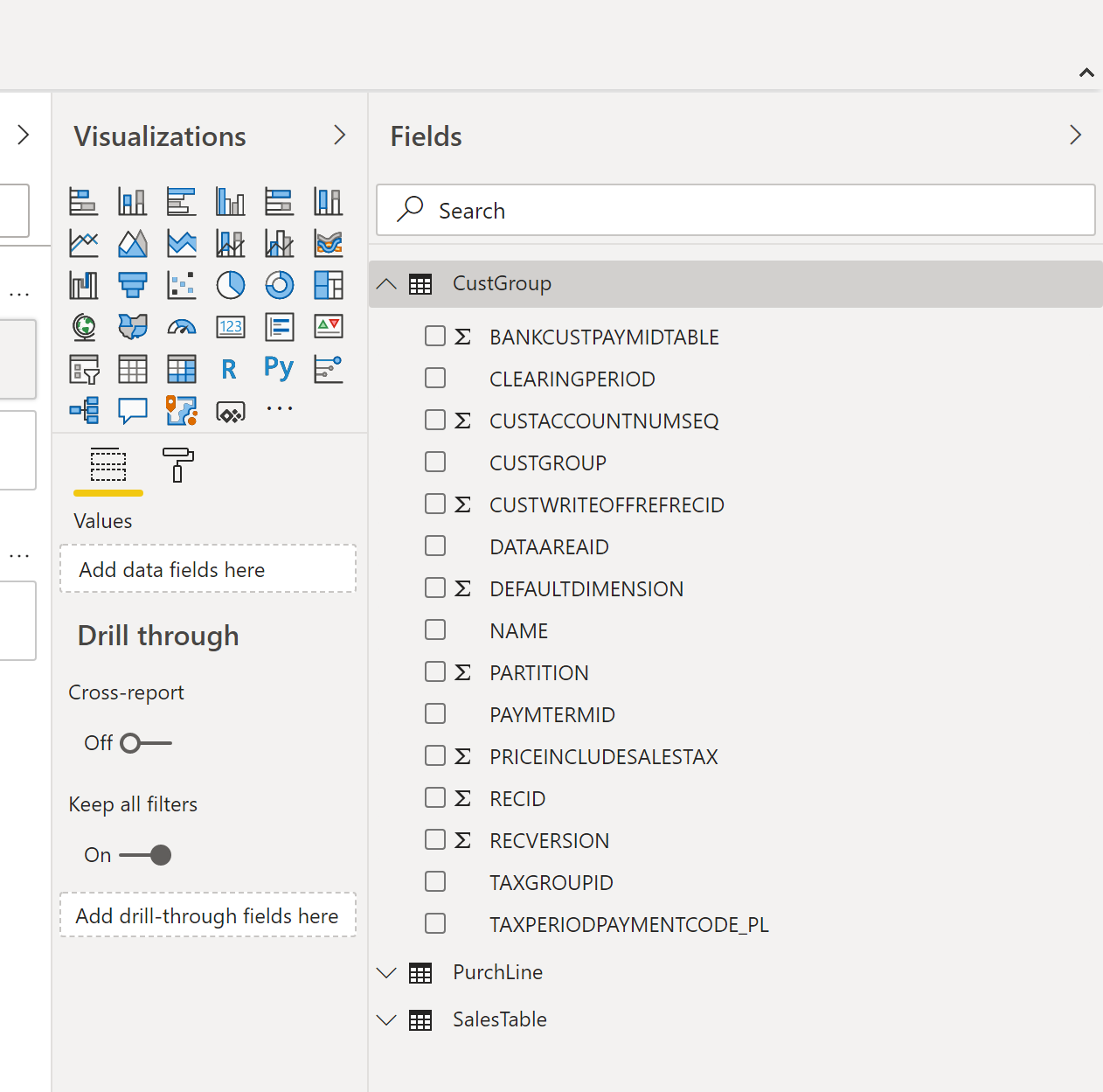

After I can of course see my view on PowerBI ; same as on Synapse. This is will be the main part of the IT Team here : focus on building queries and views on Synapse to propose an easy model for them / so not clearly like me below where I just have some tables :

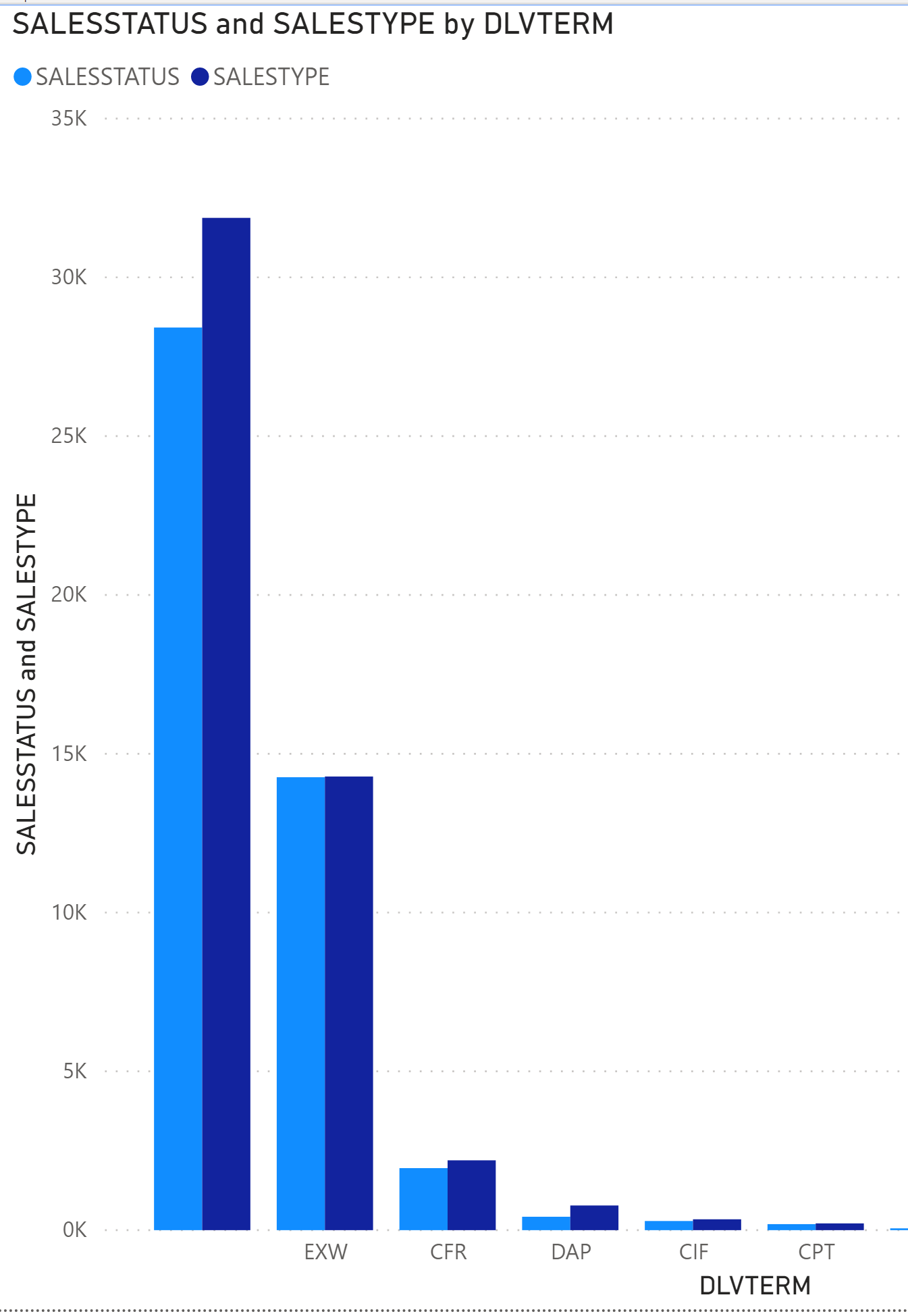

And of course starting to focus on Visualization where users will see the latest data coming from my ERP instance :

Hope you have a good understanding on how to build now a global Datawarehouse from Dynamics 365 F&O in order to let users build their own reports.

Don’t hesitate to forward, comment, feedbacks, questions !

Here is also my DEMO Youtube Video to show directly how it works !

Take care and see you next time !