LCS API Database Movement with PowerAutomate

PLEASE REVIEW my latest article (more up-to-date) in August 2022 - I made a brand new complete solution !

Hello Dynamics & Power Community,

I’m very happy to announce a good news for you the Dynamics365 F&O Community. I’ve got a little gift for you, as part of the new LCS API.

If you were, like me as an AX 2012 or F&O Technical/Architect Consultant, you probably already suffered from not being able to offer customers the ability to automatically refresh your database from one environment to other. Of course, you could do it via scripts, powershell (but really complicated for LCS and F&O : see even impossible), but finally doing it by code actually…. and here I will show you the benefit of the PowerAutomate / Flow to refresh automatically your F&O production instance to an UAT instance, without any line of code !

I will provide you, the How-To setup here in this article (step-by-step), also 3 samples of my GitHub account for PowerAutomate flow.

Hope you will like it, and feel free to share it :)

So…. how to start :)

First of all, here is a quick summary of the new API for LCS - in Dynamics 365 F&O.

Like you know, we were not able to perform automatic Database movement in the past. We get only to an Azure Marketplace a flow between Azure DevOps release pipeline in order to upload your BUILD package and also setup an automatic deployment. It was the very beginning to perform great automatic release pipelines : like me, deploying every night for customers a recent package in our TEST environments every night after BUILD completed.

Database movement operations are a suite of self-service actions that can be used as part of Data Application Lifecycle Management (also referred to as DataALM). These actions provide structured processes for common implementation scenarios such as golden configuration promotion, debugging/diagnostics, destructive testing, and general refresh for training purposes.

In this topic, you will learn how to use database movement operations to perform refresh, export, import, and various flavors of point-in-time restore.

At the time, I write this article, the LCS API have only 4 methods but I hope Microsoft will add soon more methods and of course I will share that with you depending on which and when it will be possible.

We can perform the following operations through the RESTful API:

List DB backups for an LCS project.

Create a DB refresh between two environments (only Prod and Sandbox environments, like we can do in LCS).

Get an ongoing operation status.

Quick feedbacks for Microsoft : we really need to add also monitoring KPI stuff methods in order to get the DTU SQL Percentage Live for production or other stuff like that, in order to not connect every time in the LCS Portal and check if everything looks good : to be proactive in fact / and maybe also restart service etc… : but yep I maybe ask a lot, it’s a good start now that this API is open, so I know that Microsoft will bring this API even better this year !

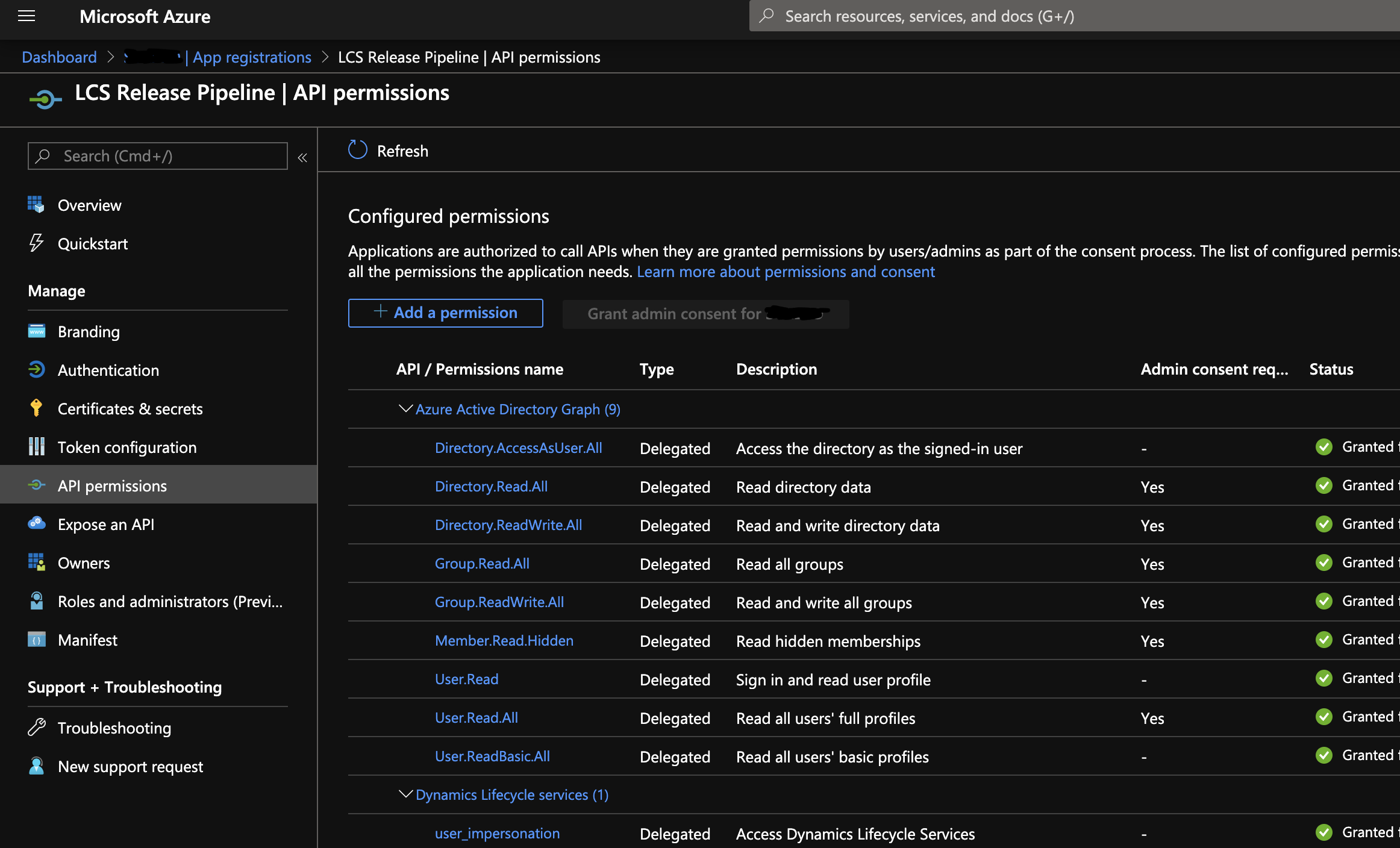

First, you will need to create an Azure AAD in order to connect to the LCS API.

You can go here for the How-To setup :

Don’t forget to copy somewhere your ClientID : ApplicationID and also to generate a ClientSecret. Also don’t forget to put the Dynamics Lifecycle Services like below as a Delegated access, with Grant Admin permission.

So, now you have the access to call the LCS API, and you can authenticate to it. But maybe, like me, if you had in the past configure the AzureDevOps release pipeline process to connect to LCS, you had maybe already an App Registration in your Azure AD, so you can keep it and reuse for this purpose.

Just for your information, It was not possible to create directly for you Custom Connectors in PowerAutomate in order to deploy it more easily. Since the LCS API use at the moment a strange and old school authentification. Hope I can share that when OAuth 2.0 will be used for this API !). But these samples will be enough and easy to configure, trust me !

Now, you can get my PowerAutomate / Flow samples directly in my Github Account :

Download all the 3 ZipFiles

After, you can go to your PowerAutomate account. I know that partners/customers in FinOps are not (yet ?) going very much in there, but still, I hope I will help you to discover this fantastic feature in the PowerPlatform ! And yes, normally (like me), if you had bought your F&O licences, you have already a free account for every users, and it will be more than enough to setup some flows like this one.

Go, to “My Flows” in order to import it.

Select my ZIP file from my Github,

Here, don’t be afraid for the 2 resources / connectors (Mail & Push mobile notification) you can setup it before importing or just map to your connectors if you had already setup for other flows before. I just used them in order to give you some example of actions, you can delete them after import process.

When you have done all done 3 imports of the sample, let’s go in 1 by 1 if you don’t mind !

PowerAutomate Sample - LCS API - Automatic refresh database

If you go in, I will explain step-by-step in order to configure it and also give inputs why I’ve done that :)

Click on “EDIT” button.

Here is below the whole flow, without any line of code :)

So, first step, since it’s a scheduled flow in my example, I can setup when my flow will run. Here I have put every weedays at 7 p.m but sure you can edit it of course. Keep in mind, that you can of course do it by a trigger from other connector in PowerAutomate. Like maybe do it after an Azure DevOps Pipeline is finished or after a BUILD process, after also an approval by someone else in Microsoft Teams in adaptive card, by a click in a button in a PowerApps : well, I can give 150 examples so you can setup like you want !

Just be careful also on the interval, you can setup only 3 refresh in a day for 1 target environment ! (limitation by LCS)

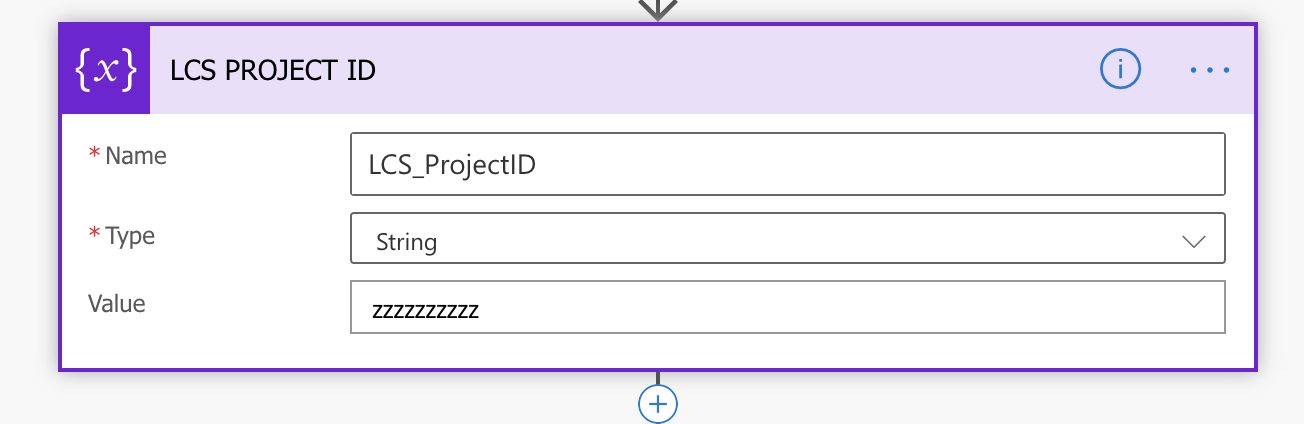

After I just create a variable to put your LCS project ID. Of course, change the value in it to reflect your current LCS project.

You can grab it directly in your LCS portal if you don’t know it

After, I use the HTTP connector to perform the authentification with of course your Azure Application ID and Secret token that you have created earlier.

You will need here only to change the values of : username, password, clientid and clientsecret.

Keep the resource and grant_type like this, as it is. Don’t change the Content-Type of the header.

One more thing : don’t use an LCS account that of course doesn’t have access and have appropriate security role. And like me, don’t use an account that has a MFA account (Multi Factor Authentification), use like an administrator/service account.

After I just use the Parse JSON feature, in order to get the object of the response. The most important thing is the property : Access_Token

It will be used after for every call to the LCS API, in the header, you will see :)

Keep in mind that the Token is for 1 hour of expiration !! So don’t forget to refresh your call to authenticate after 1 hour if you need to !

After I have created 2 more variables : the source & target environment of your LCS environments that you want to refresh database. Of course, change by your need !

As a reminder, you can get the Environment ID by going in more details of LCS.

Finally I call the LCS API to refresh the database, like this :

Here you don’t have to change anything, good right ? :)

But, I want to explain the Authorization header. It’s here that we put the Access_Token of the response after authentification.

It’s like : Bearer xxxxxxxxxxxxxxxxxveryhugemassivetoken

The space key is mandatory, so don’t forget it !

Of course at the end, I add it also a Parse JSON to build the object response of the API.

After, I added a conditional split. Of course, the purpose is to achieve a different path in the Flow, if the response is OK or not…

In my “not success path”, I just put a Push Mobile Notification Action, but it’s just an example of course. You can change it by whatever you like. Don’t forget you have now more than 300 connectors in PowerAutomate (Microsoft and other third-party apps !)

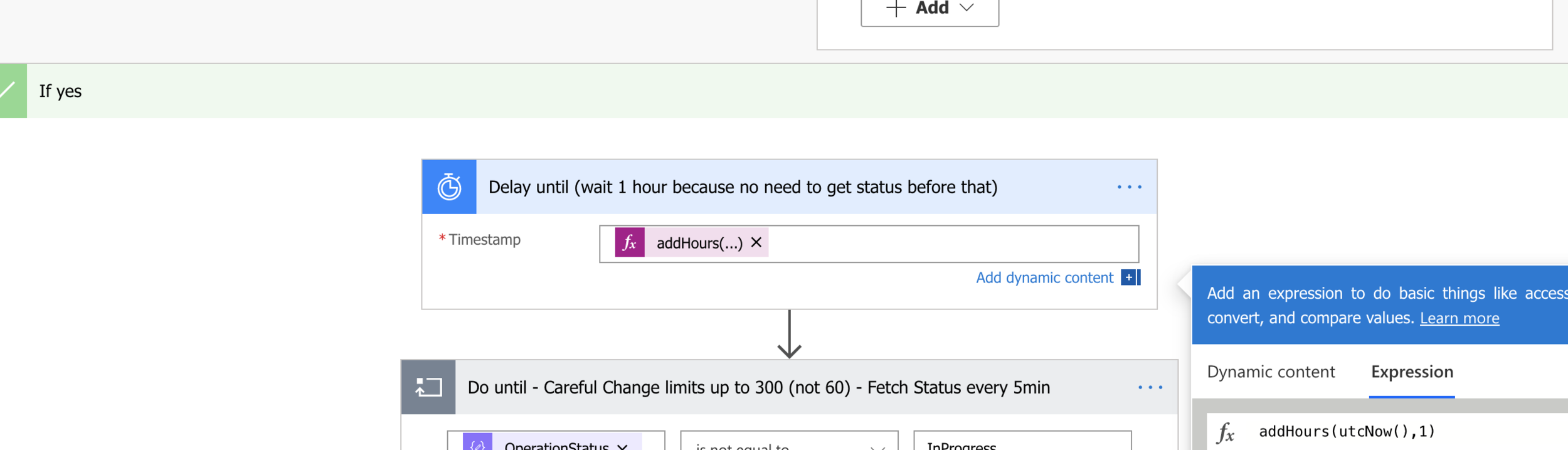

On my “success path”, here is for me the most important feature !

First step here, I wait for 1 hour, magic no ? but why ? Well because, we don’t have to call LCS API to fetch the status of the current activity of the database refresh until more than 1 hour. Like me, it takes 1hour30min and sometimes maybe 2 hours to finish all the refresh. But you change it of course by adding just 30 minutes of wait if you had experience faster refresh on your side ! It’s also for me a good example to show you that we can put Expression without to be a massive developper !

After I’ve done a Do Until feature, in order to call every 5 minutes the LCS API to fetch the status. In order to after doing some actions in the same flow.

The end of my “while'“ loop is until we’ve got a Status Operation than is not equal to : In Progress. Just be careful of the Change Limits here, you have to increase it to 300 like me, because by default it’s 60 : that’s say that we will only do this part for 60 minutes. But I already do that for you :)

Update (17/04/20) : Just found that you can also increase the timeout of the Do Until statement. By default it’s PT1H , you can change by PT2H for 2 hours or more if you need.

After, I just fetch the status of the Operation/Activity of the database refresh to know if it’s finished :) Parsing JSON again of course, every time in fact so I hope you are now familiar with that.

And now, when it’s ended, why I’ve done that ? Well because on my side (and I think you also), you have some “Post-Refresh” statement - script or SQL script to achieve. As you know, after a refresh database, you have all BatchJobs On Hold, only 1 user access activated, and you want maybe to change some parameter to reflect the change and of course don’t activate the Email distributor batch to sent email to a customer from an UAT Instance… believe me I’ve seen that many times !

So that’s why I’ve put this last step here and will explain more deeper with just a small part of X++ code for AX Technical guys.

In fact, it was not possible for me to give you a sample of the Dyn365 F&O connector to share it (without giving my credentials) directly in this sample, so you will need to do it yourself for this part.

So, of course you will erase this part of sending email after completion, it’s irrelevant. You already receive an email from LCS when the refresh is done.

First, you can of course add other actions if you want to, like I’ve said before you have 300 connectors so you can put something else.

But for me, you will change by that !! The Dynamics 365 FinOps connector in order to call Action / in fact it’s like a job in AX. It refers to the methods applied to a data entity.

Create a new step like that by choosing the right connector. You will notice that you have multiple actions. Here you can see that for other Flow/PowerAutomate you can achieve more : by creating, updating, retrieving records etc…. so for other use cases, you can have a look !

Here, I will pick “Execute Action” :

But, like me, my question was : “What the hell are coming from these actions ???”

See the documentation of the connector F&O :

Like I said, it’s coming from Methods in the Data Entities in F&O. So like I’ve done below, you can create a custom one, and create method that in fact will do of course your post-refresh database script. Like me, it was done for reactivate all users, batchjobs. But sure, you can do it depending on your needs !

Just as a reminder, don’t forget to use the connector F&O with the Environment Manager that have access after a database refresh !

So just create a new Data entity, that refer to whatever table coming from AX like that :

Create your methods, that refer to SysODataActionAttribute.

Here I don’t give a response (void) but you can of course also put some object if you need to get back to PowerAutomate flow.

Here we are, finished for this first sample.

2. PowerAutomate Sample - LCS API - Export database

You already know that we can export database also on the LCS Portal by manual action on a Sandbox instance. Of course, it can be good to do it like just after our previous sample because we have done a flow (DataALM) to refresh the production to an UAT instance. If you want to get the .bacpac (backup of SQL Azure) in order to deploy it (implied your production database) to a DEV environment. Maybe also because you want to archive it to a safe repository. So yes, the LCS API has already a method for that, that’s why I made this sample for you.

I assume that you have already download it from my GitHub Account before and already import it like the previous one.

Go in this flow.

I will not explain again this part, because it’s exactly the same as the Database Refresh.

After, I made 2 variables : the backupName wanted and also the environmentId that you want to export the database.

After, I call the LCS API and grab the response to a JSON object.

Finally the conditional split, you can change like before by whatever you want.

3. PowerAutomate Sample - LCS API - Retrieve list of database backup

Here, we will have the possibility to retrieve the list of the whole backup list in your LCS Asset Library. As you know, it’s the default storage, where all database backup are coming.

At the end I’ve done a For Each feature, good way to show you this point !

Well… sad news : true. Because I try to add also an another HTTP Connector for the FileLocation (it’s the HTTP location where you can download your backup) but the limitation is for 100MB so you will get like me an error. My aim was after to grab the fileContent to a OneDrive, Google Drive, Sharepoint or Azure Blob Storage, but yes… at this time we can’t.

The only way that I have for you, is to do it by a more complicated way. You can create a powershell script in your own network / share folder (onPremise). You can after download the Data Gateway for PowerAutomate in order to call your script, passing through the FileLocation and your Powershell will download it on your onPremise folder. Yes I didn’t talk about that, but yes we can achieve a connection from PowerAutomate (SaaS - Cloud Only) to connect on an onPremise API - Service.

To conclude : I hope you like it :) Besides that, feel free to contact me, comment, do feedbacks etc…

You can see easily that PowerAutomate in part of the PowerPlatform is really a huge feature that will help us in a lot of flows for F&O project.

Because despite this technical part of DataALM, like I’ve done for other articles in this blog, we can achieve a lot more of use cases now ! Business cases , Business Events etc…

See you ! Stay tuned. Add me on social networks :)