Dynamics 365 Finance and Operations Reporting : DataLake and PowerBI Dataflow

Hello the Dynamics365 F&O Community,

As part of new events, sessions or webinars, I told my self that it will be very great to do a new article about a new type of architecture for Reporting purpose of your loved ERP : Microsoft Dynamics 365 Finance and Operations. As of now, I always seen couple of architecture : some strange one, some unoptimized (most of them), some with a global amount of tasks for IT stratospheric compared with the need of Business Users to analyze their KPI (like : Purchase Analyze, Sales Analyze, Inventory, Budget etc…)

This article is more an extension of my previous one that I already made couple of months before. If you want to read it, I really suggest to because it was really a focus on Azure Data Lake that will part of this new Architecture for Reporting.

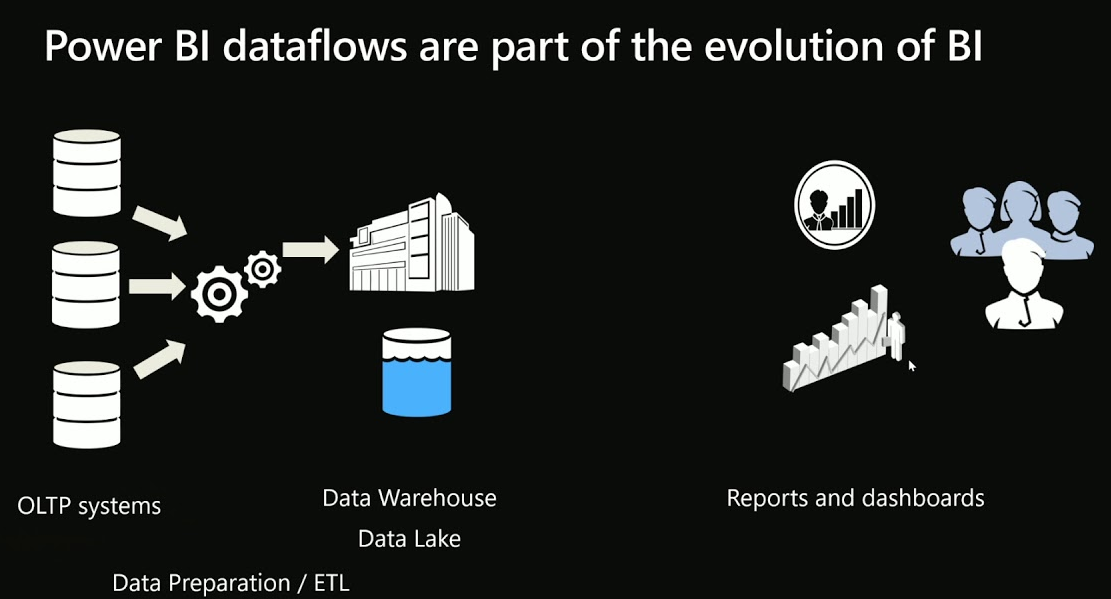

Before jumping to the How-To step-by-step process to provision this new of type Architecture, let’s introduce the 2 components : Azure Data Lake (ADLS) and PowerBI Dataflow.

For Reporting, in Dynamics 365 F&O you can now store your Datawarehouse in your own DataLake - As I said before, I had already explained how to use it, how to configure & store all your Cubes in an ADLS in my previous article. This is the first important step mandatory in order to use after PowerBI Dataflow ! it’s really important to go through this new type of storage. You can also use it for PowerPlatform via CDS Export Data Lake feature. You can also use for Machine Learning (you will see that for coming Insights / AI Feature of D365 F&O, you will have to use also an ADLS) : so clearly I suggest to start to have a look on it : because the Data Lake will be the MAIN central repository of all your DATA : combined with previous old legacy system, as well as Dynamics 365 Apps.

On my side, I also see a big advantage of it for D365 F&O : is to have access in your own Azure subscription. If you are like me on a Cloud Online Strategy, you don’t have access in production. Also, the tables & data entities (BYOD) will be replaced from an SQL Azure Sync Purpose to Sync it directly to an ADLS. Much faster sync, less cost !

Despite the fact to store all your Cubes / EntityStore to an ADLS, you can of course extend it by development (AggregateMeasurements) and deploy new Cubes, even of course your custom fields if you want to have it after in PowerBI. This development is clearly fast and not complicated. I always also recommend to use EntityStore for BI Reporting in D365 F&O and not Data Entities. All your cubes in ADLS will be stored & compliant as a Common Data Model folders.

Why ? While the Data Entities will become shortly in ADLS too, it’s normally dedicated for API REST (integration) and Data Management Framework (DMF) and not for BI ! EntityStore / DTWH of D365 F&O is clearly a database for that, it’s dedicated for that and only for that. So go for it ! I have seen a project when someone made PowerBI Reports with Data Entities and ask them to refresh automatically and get back all DATA in PowerBI all night long getting back more than 10 millions of lines by calling the API of Production… not so great…

The other component that I had already presented a little bit for my previous article, it’s PowerBI Dataflow.

Power BI introduces dataflows to help organizations unify data from disparate sources and prepare it for modeling. Analysts can easily create dataflows, using familiar, self-service tools. Dataflows are used to ingest, transform, integrate, and enrich big data by defining data source connections, ETL logic, refresh schedules, and more. In addition, the new model-driven calculation engine that's part of dataflows makes the process of data preparation more manageable, more deterministic, and less cumbersome for data analysts and report creators alike. Similar to how spreadsheets handle recalculations for all affected formulas, dataflows manage changes for an entity or data element on your behalf, automating updates, and alleviating what used to be tedious and time consuming logic checks for even a basic data refresh. With dataflows, tasks that once required data scientists to oversee (and many hours or days to complete) can now be handled with a few clicks by analysts and report creators.

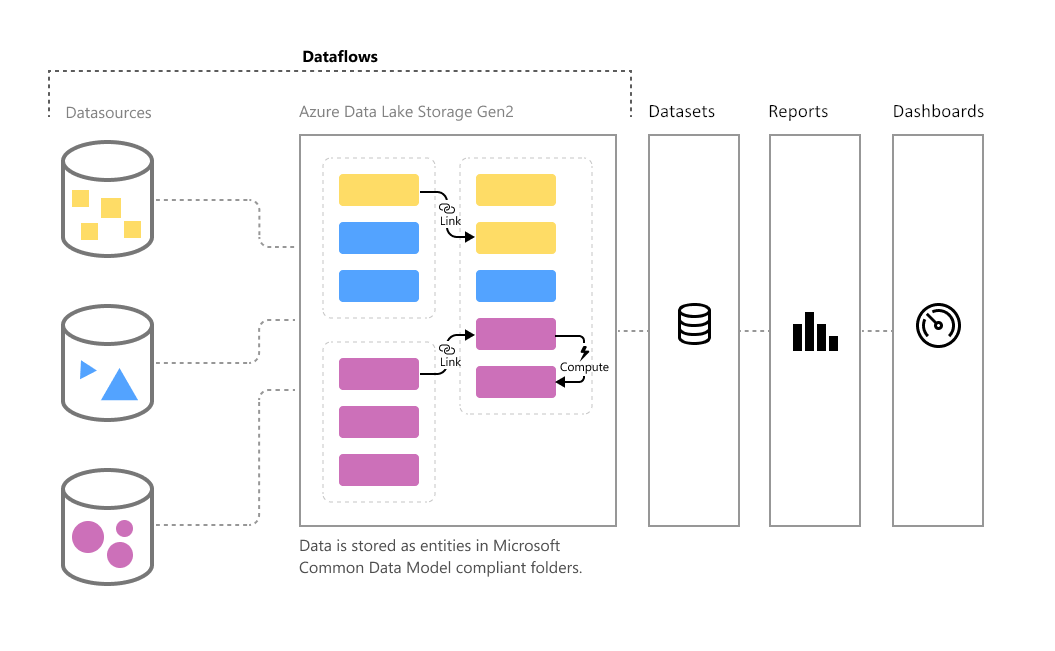

Data is stored as entities in the Common Data Model in Azure Data Lake Storage Gen2. Dataflows are created and managed in workspaces by using the Power BI service.

Dataflows are designed to use the Common Data Model, a standardized, modular, extensible collection of data schemas published by Microsoft that are designed to make it easier for you to build, use, and analyze data. With this model, you can go from data sources to Power BI dashboards with nearly zero friction.

You can use dataflows to ingest data from a large and growing set of supported on-premises and cloud- based data sources including Dynamics 365, Salesforce, Azure SQL Database, Excel, SharePoint, and more.

You can then map data to standard entities in the Common Data Model, modify and extend existing entities, and create custom entities. Advanced users can create fully customized dataflows, using a self-service, low- code/no-code, built-in Power Query authoring experience, similar to the Power Query experience that millions of Power BI Desktop and Excel users already know.

Once you’ve created a dataflow, you can use Power BI Desktop and the Power BI service to create datasets, reports, dashboards, and apps that leverage the power of the Common Data Model to drive deep insights into your business activities.

Dataflow refresh scheduling is managed directly from the workspace in which your dataflow was created, just like your datasets.

Uses For Dataflows

The immediate use that comes to mind for me is the ability to standardise access to source data. Up until now, when I create a new Power BI Desktop file, I have always connected back to the original data source and loaded the data I needed for the new PBIX file. Often the data needed to be transformed, so I performed some transformation steps in Power Query before loading. Then, later on, when I needed to create a new workbook, I had to either copy the Power Query steps or re-write the steps again from scratch. Each person in the same organisation with the same need for the same data had to do this too hence multiplying the rework. You can imagine that there is the potential to have many versions of the same thing. With dataflows all that changes. One person can create a single dataflow and make it available via an App Workspace. Anyone with access to that App Workspace can then directly access the table of data directly from that dataflow (demo to follow).

Other benefits include:

Effectively creating an online centralised data mart/data warehouse for storage of data in a format that is better suited to reporting and analytics than the source data.

As new dataflow connectors become available, it will be easier than ever to connect to important data such as “customers” from services such as MS Dynamics 365. I have tried to do this myself in the past and found it far to complex for the average business user. This is all about to change.

Dataflows have incremental refresh (currently just for premium) meaning that large data refreshes that currently can take a very long time to complete can be configured to refresh just the changes.

Now let’s make more examples and DEMO directly how it works for Dynamics 365 F&O for Reporting - New type for Architecture with ADLS and PowerBI Dataflow

So first part as I said, is to have all your Entity Store of FinOps in an ADLS. You can select here all Aggregate Measurements that you want to be synced after in your ADLS. Select Automatic Refresh Enabled for that. Just keep in mind also that Microsoft has already done some PowerBI Reports in standard, all of them was part and connected to this EntityStore / DTWH and not the Data Entities :)

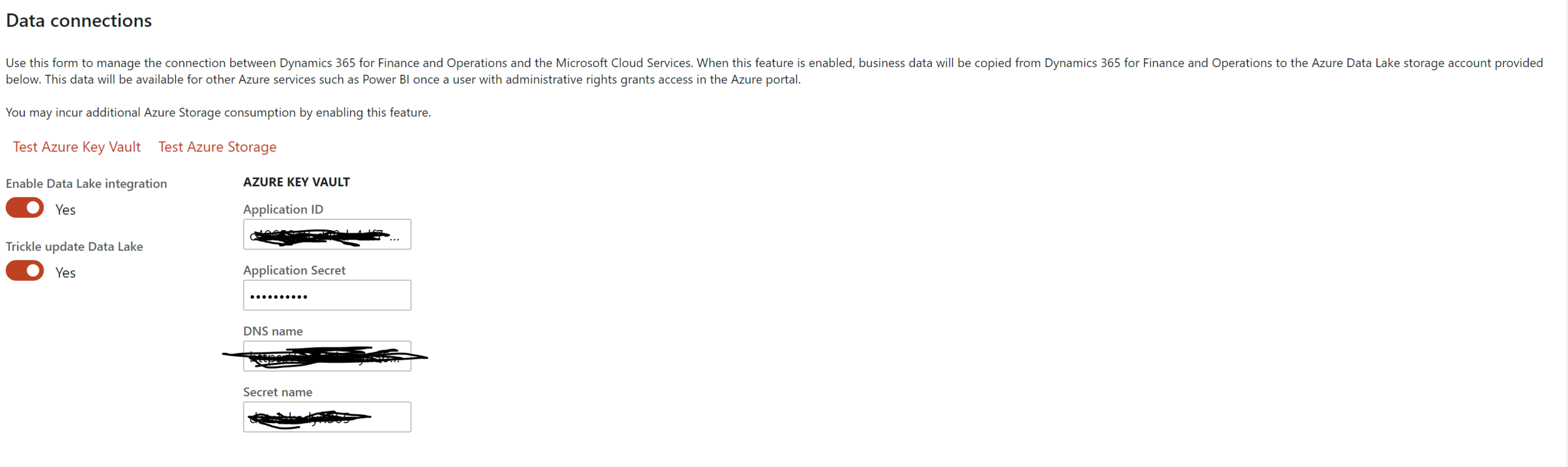

To have all your cubes in the ADLS, you will have before to do the configuration in System Parameters form. But you can find all How-To configuration in the Chapters 3 and 4 of my previous article.

It seems also that in coming months, this will be part as an Add-in in LCS environment and that we can do the configuration directly in LCS to setup the ADLS sync.

Last step on Dynamics365 F&O part, is to be sure that these 2 batchs jobs are running well :

Full Reset (Has to be launched one time for the Initial Sync in ADLS - could be also used to run every night)

Incremental update (part of Trickle update Data Lake during business hours to sync in more real-time DATA to ADLS like it can be every hour)

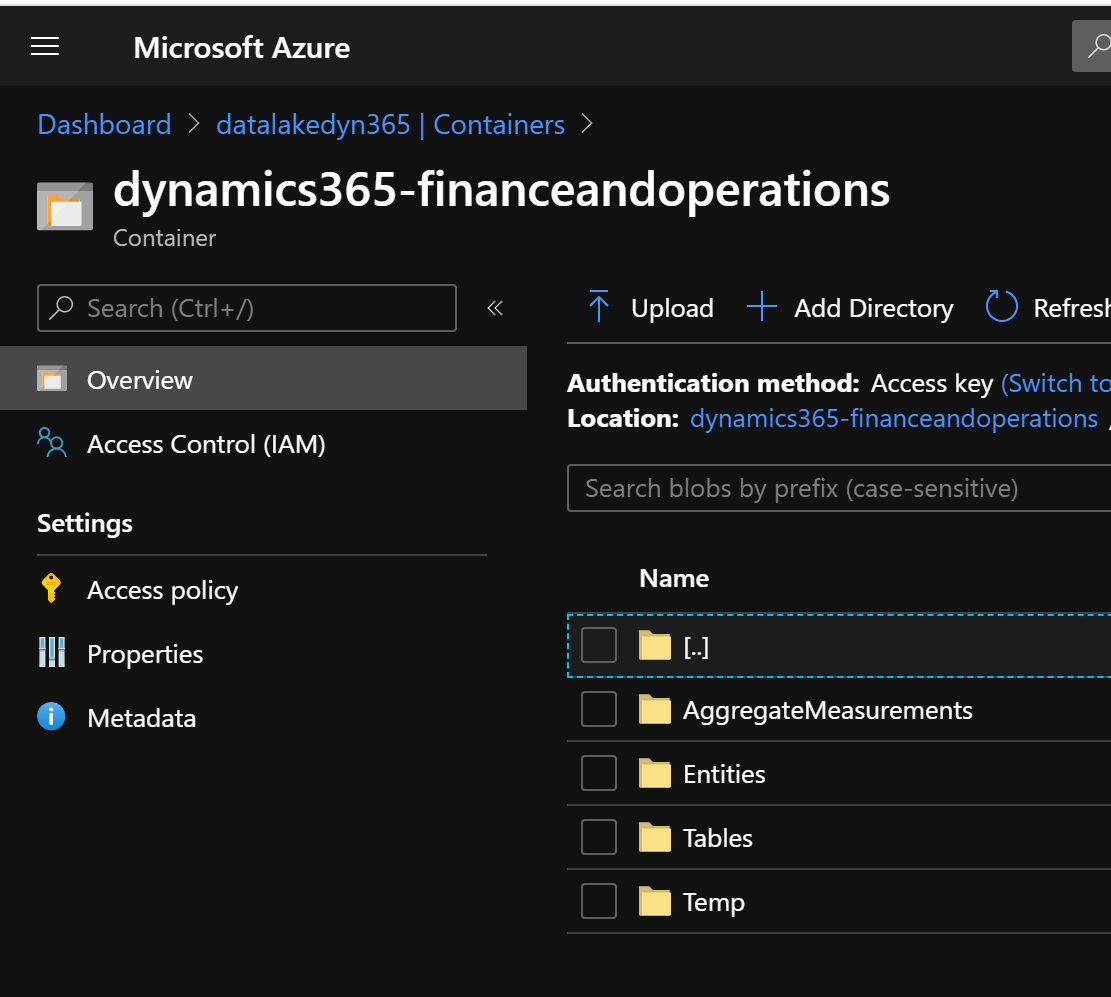

Now if I go to my Azure Subscription, you will have your EntityStore / Aggregate Measurements / DTWH synced in your ADLS.

You can already see as part of a public Preview that we can have now also Data Entities and Tables in this same ADLS :

Now you have your CDM folders (model.json file at every folders / AggrehateMeasurements) with CSV files in it, Don’t connect or use directly the CSV files in PowerBI Desktop, otherwise use the model.json file in PowerBI Desktop because it will have the name of columns and the hierarchies of the sub-folders. But, as I said on my side, I really suggest to go directly in the next step : PowerBI Dataflow.

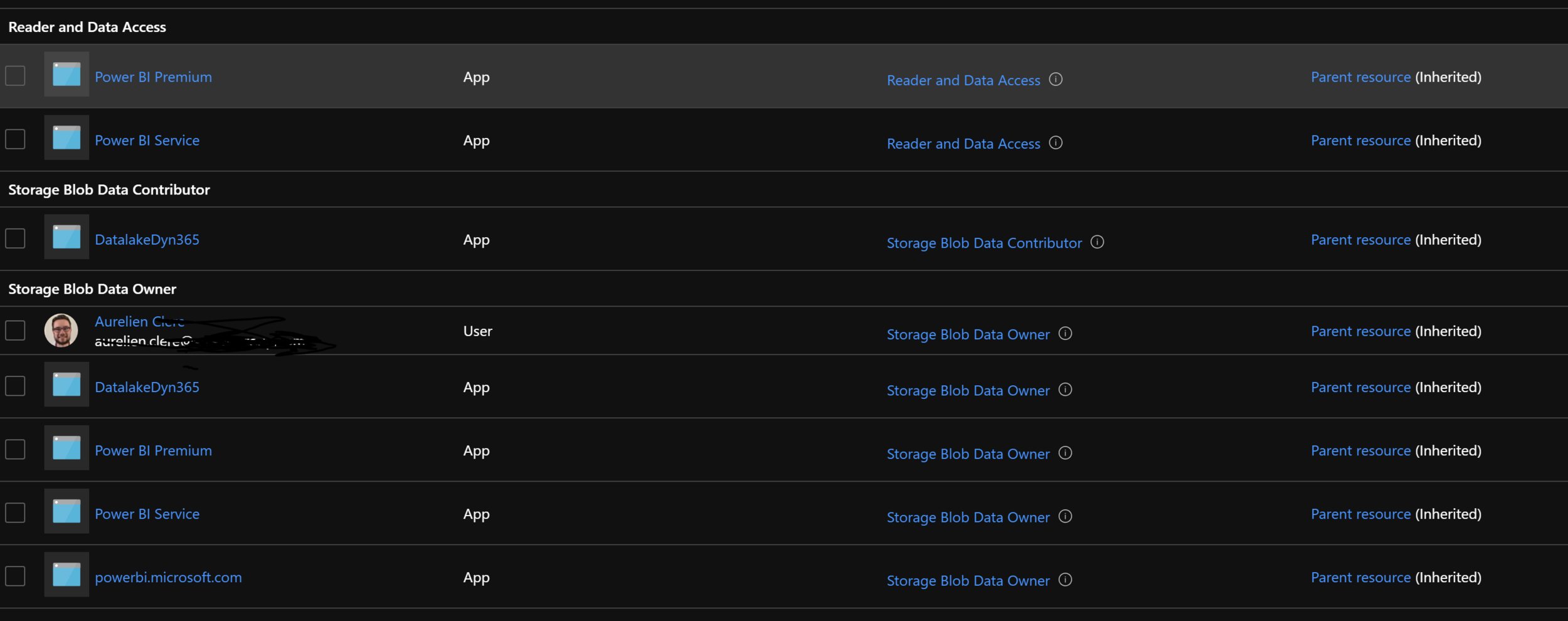

Since we are already on the ADLS part, we will have to configure the IAM (Access Control) to setup the PowerBI Dataflow capability.

In order to achieve that, you will need to add the 2 App : Power BI Service and Power BI Premium as Storage Blob Data Owner, and Reader Data Access

Now go directly in PowerBI.com ; and in Admin Portal mode.

Now you will have to add the configuration of your central and main ADLS in your company.

Be aware, PowerBI can accept only one ADLS. But, ADLS is global : by environments and by data sources : so it’s not a problem.

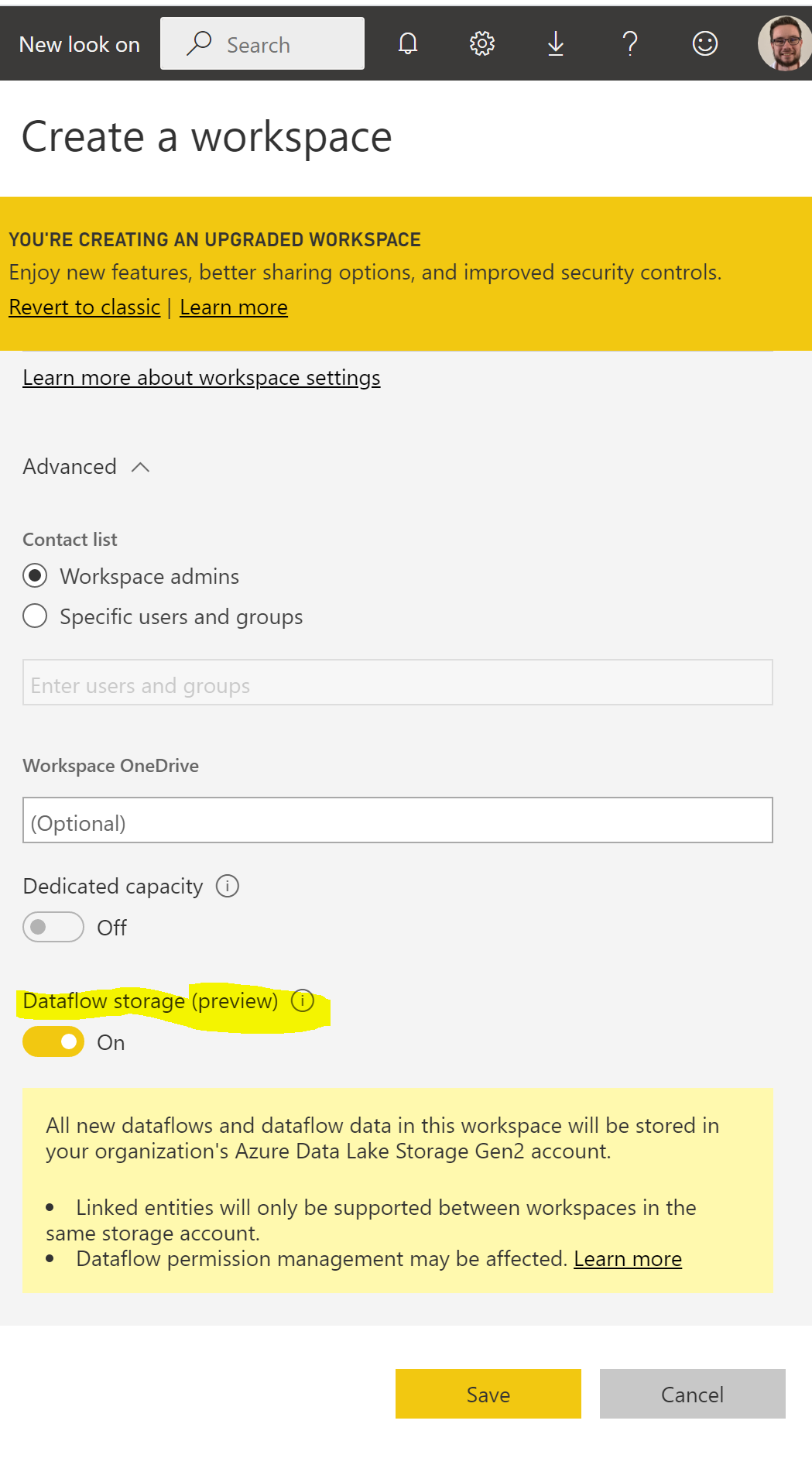

Now your PowerBI tenant is linked correctly to your ADLS where you have all your Entities Store ; we can now create a specific global Workspace where we will store all the PowerBI dataflows. Don’t forget to activate the Dataflow storage before creating it.

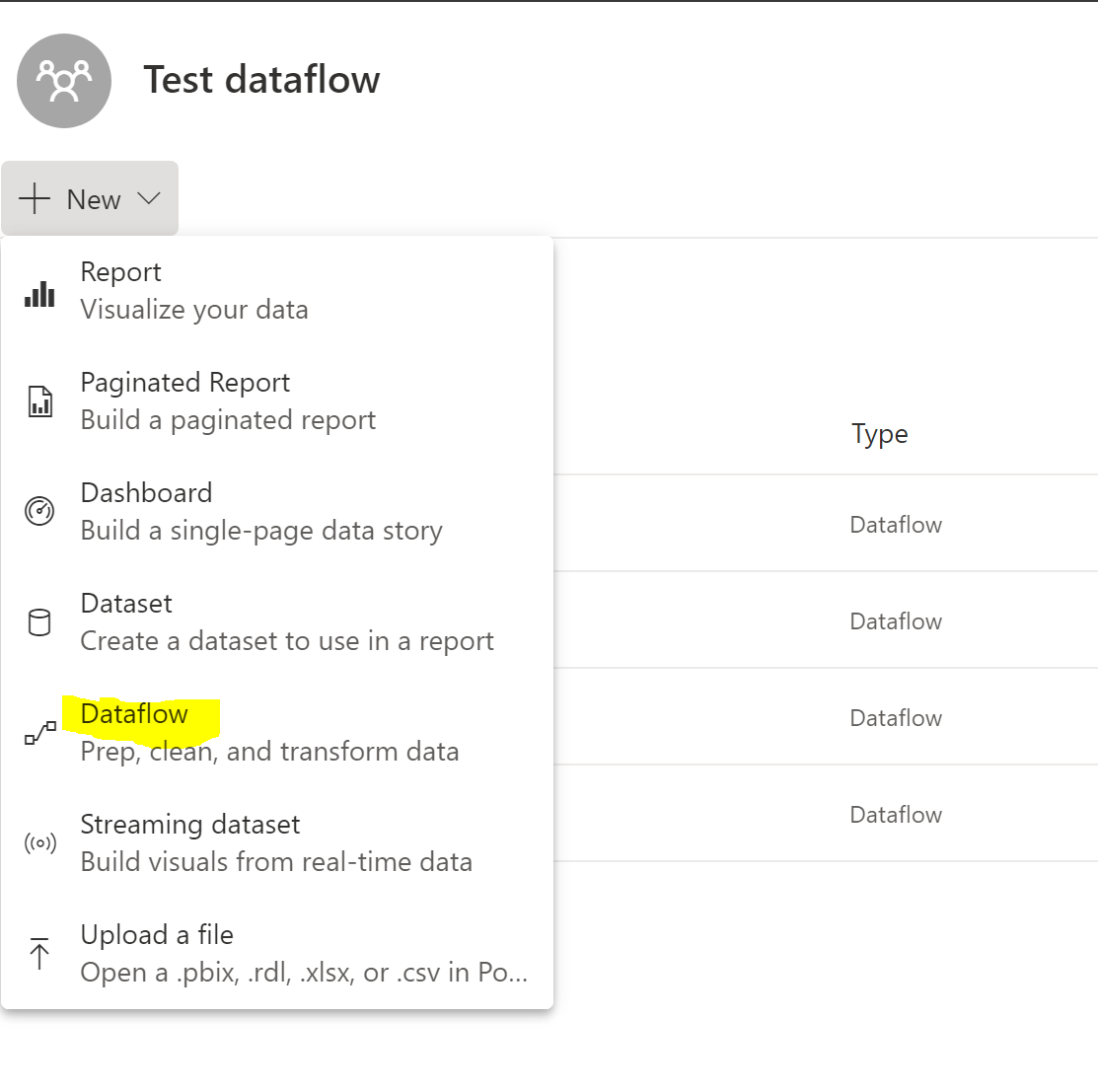

Now you can go to this new Workspace.

Let’s create a new one for example and DEMO.

As I said before at beginning of this article, the aim / goal of Dataflow will be to create as many as possible all standard global Dataflow for your company , as part of all the AggregateMeasurement. The IT team can create them, do the preparation, cleaning, relationship and just after publish the main one for business users so that they will have just one simple query with not technical fields names, without to do on their own the relationship and so on !

For me it’s always complicated to explain the Model of Dynamics AX for so many years to a business users. So we want to faciliate the DATA sources part in PowerBI and let them only do visualization!

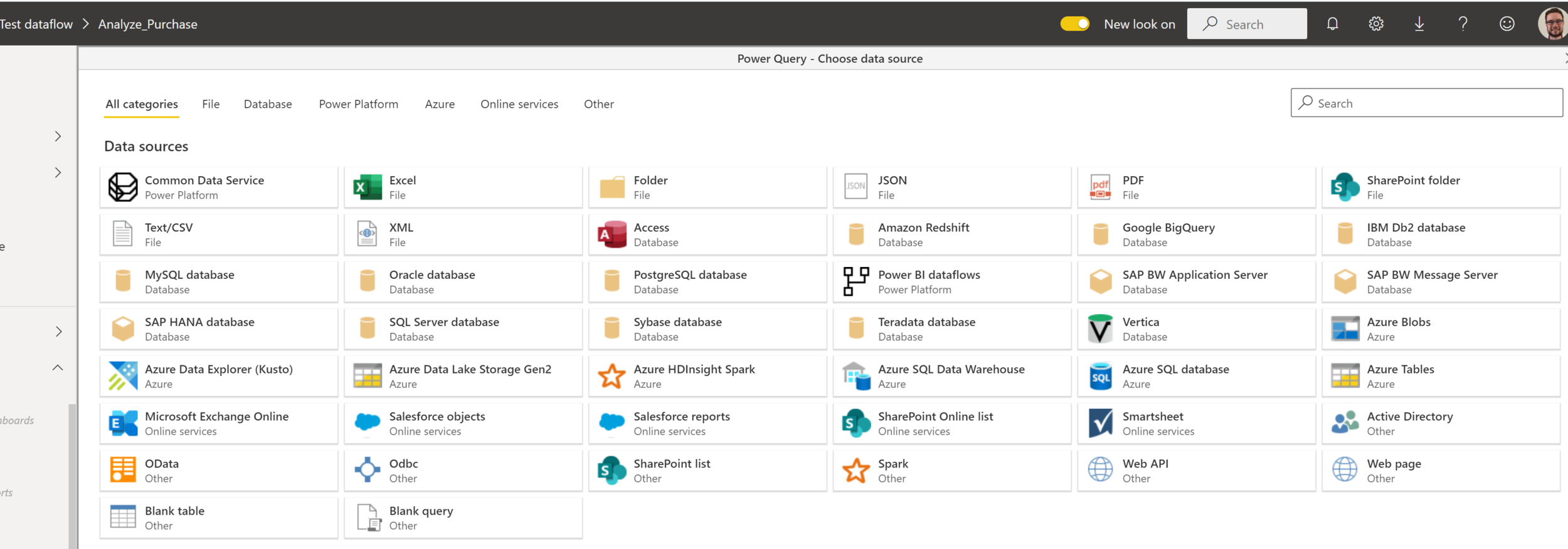

You can see here that we can create Entities in Dataflow linked to our ADLS storage, or even Attach directly to the CDM folder. We can also do several Dataflows and link other entities.

So as a best practice, to begin, I Suggest to do a 1 dataflow for every Cube / AggregateMeasurement with Attach Common Data model Folder

After that create new one, that will do the relationship, cleaning, rename columns etc… in PowerQueryOnline and this is this dataflow that you will share for end users. For that, pick Define new Entities or Link entities from other dataflows.

Like me I’ve created a basic one that is related to my Purchase Cube in my CDM folder/ADLS and after create a new one “Analyze Purchase” Dataflow.

In this Analyze Purchase Dataflow that I will share to my business users, I have linked to my Purchase Cube Dataflow. But in this Analyze Purchase, it’s here where I will do all the prep. and clean stuff.

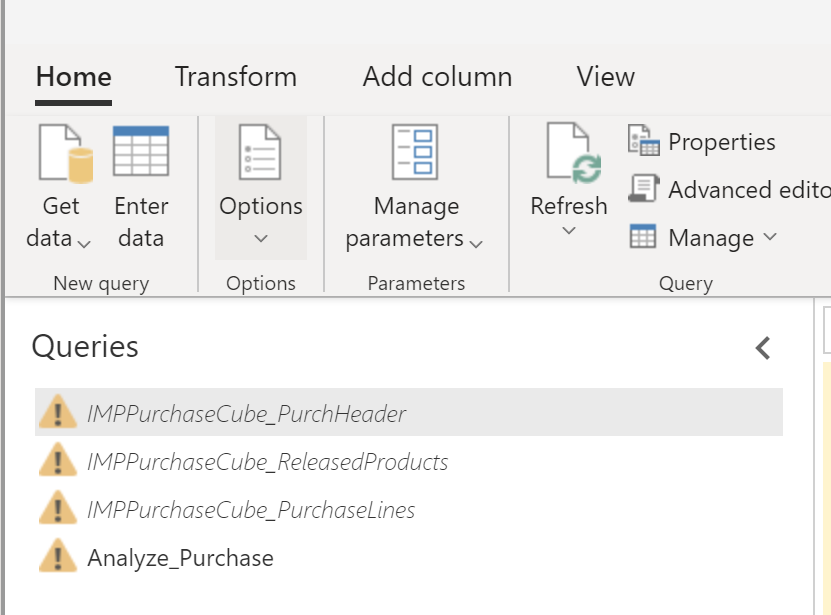

You can see, I have linked to my standard Dataflow for my Purchase Cube dataflow with 3 parts : Purchase Header, Released Products, and Purchase Lines.

The aim after it’s just publish the Analyze_Purchase with a merged query. I have after hidded the 3 tops queries, the end users doesn’t need to see this.

It’s also here in PowerQuery Online where you can add columns, remove them, rename it, filters, group by, SUM : well all transform DATA you need to do before it’s available in PowerBI Desktop.

When it’s done you can setup the Incremental Refresh of your Dataflow here :

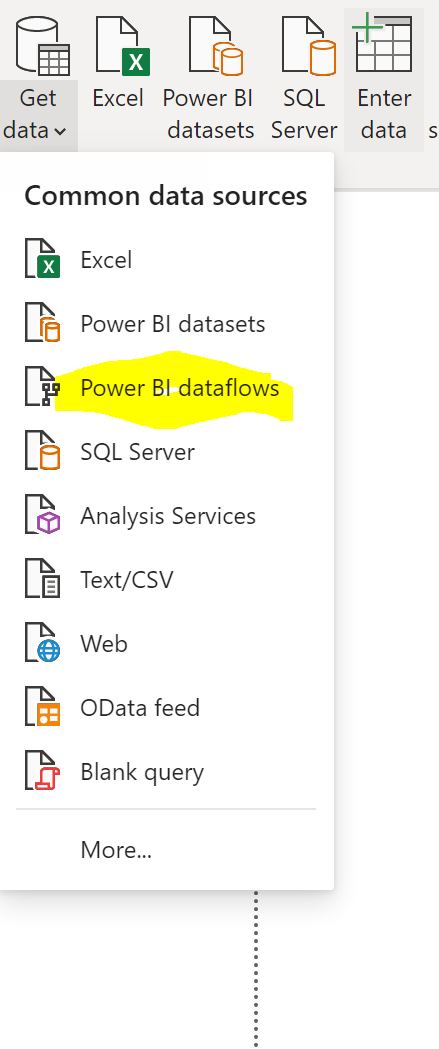

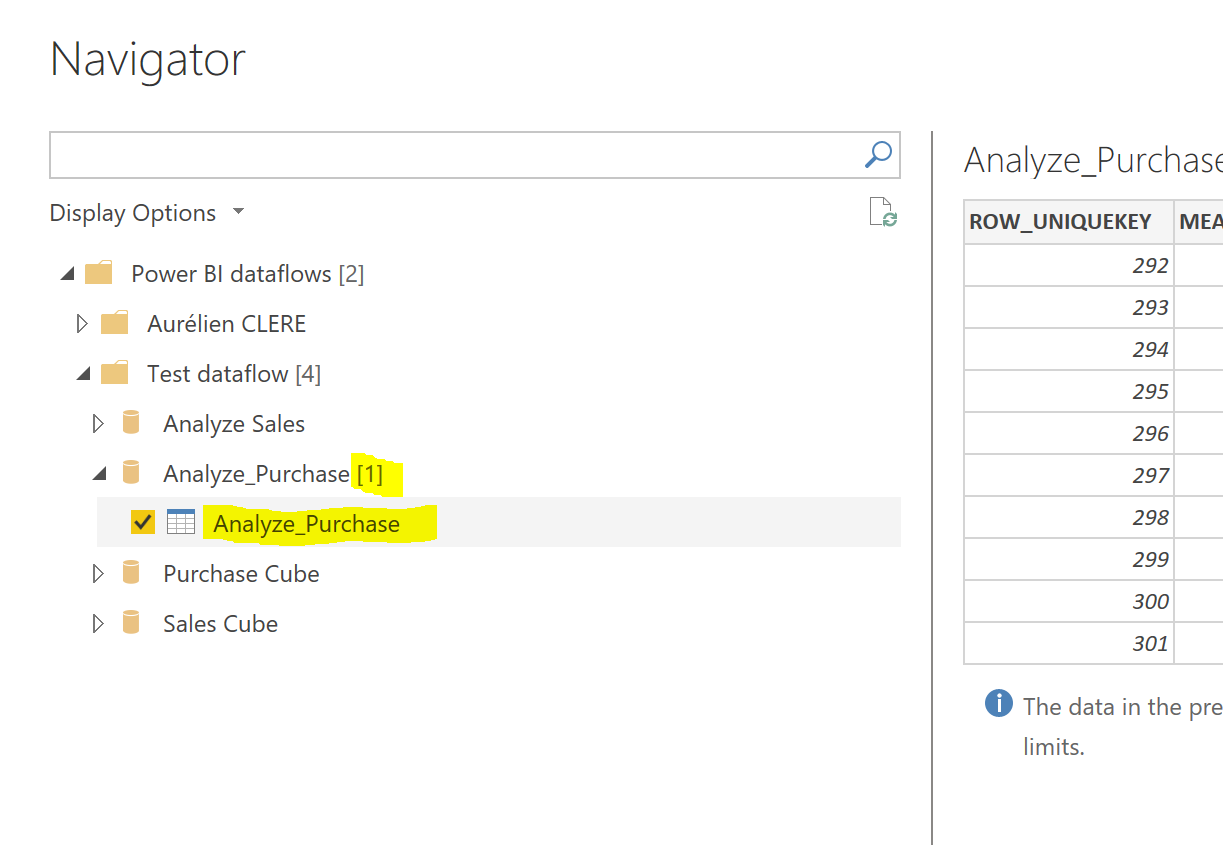

Now if I go in my PowerBI Desktop, you can go PowerBI Dataflows

You can see that my end users will have access only for this simple & global Query !!

The IT team will be responsible to setup & configuration of all Dataflows combined of the new main architecture with ADLS in Dynamics 365 F&O ; but end users will be responsible to do after their report with just 1 combined merge query with renamed columns, filtering, group by sum well all DATA sources prepared for them so that they can really focus on what’s important : easy to analyze their business needs !!

One more thing…. (like Apple does) , you can add other datasources directly in Dataflow of PowerBI. You can use other connectors in PowerQuery Online. So that if you want to combine the DATA coming from the ERP with ADLS and like SalesForce Data or also CDS / Dynamics365 Sales : you can :)

Hope you like this article ! Feel free to share it.

For the French Community, I also done a Youtube video :